In the prior blog posts in this series, we provided a step-by-step tutorial for migrating legacy Java EE applications to DC/OS in order to gain the benefits of the DC/OS platform without requiring any code modifications. Assuming you've followed our instructions, your application has been successfully migrated and deployed, is running and healthy, and end users are happily enjoying its functionality. With the migration complete, our focus now shifts towards Day 2 Operations and ensuring the application scales to meet demand while remaining healthy and highly available.

If you recall, recoding the legacy application and redeploying WebLogic instances for high availability (HA) was an impossibility due to budget and schedule constraints.

In a traditional environment, each additional Java EE app instance requires waiting for a new virtual or physical machine to get imaged and made available, plus the time and effort required to configure routers and load balancers to recognize the new machine. Migrating to DC/OS not only alleviates much of the pain around resourcing; our legacy Java EE app also now runs on a single CPU core instead of the four underutilized CPU cores the original virtual machine consumed. That's good news for us because now we have plenty of resources to enable HA and scalability for our app.

Java EE App Operation: Scaling

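Scaling your app to meet additional demand can take two forms. You can either scale up by adding resources to the existing instances, or scale out by adding more instances of the application.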

In both situations, you must first make sure that the change will not introduce application downtime.

Let's see how we can add more capacity to our existing app.

Scaling Up: Adding Resources to Existing Instances

To add more capacity to our application, we will edit the application configuration. This can be done through the DC/OS UI, CLI or API. In this example we will do it through the UI.

All you need to do is edit the application configuration, and a new instance of the application will be launched with the new resources alongside the old one. Once the new instance passes its health check and is fully operational, DC/OS will route traffic to the new instance and terminate the old one, minimizing disruption for application users.
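For readers who prefer the API route mentioned above, here is a minimal sketch of the same change as a Marathon REST call (a `PUT` to `/v2/apps/{app-id}` with new `cpus` and `mem` values). The cluster address, token, and resource figures are placeholders we made up, and the app ID is inferred from the Marathon-LB backend name that appears later in this post. The sketch only builds the request so you can inspect it before sending anything.

```python
import json
import urllib.request

# Assumptions: the cluster address and token are placeholders, and the app ID
# is inferred from the Marathon-LB backend name used in this post.
MARATHON_URL = "https://dcos.example.com/service/marathon"
APP_ID = "/dept-a/weblogic-benefits-app"


def build_resource_update(cpus: float, mem_mb: int, token: str) -> urllib.request.Request:
    """Build (but do not send) a PUT request that changes an app's CPU and memory."""
    body = json.dumps({"cpus": cpus, "mem": mem_mb}).encode("utf-8")
    return urllib.request.Request(
        url=f"{MARATHON_URL}/v2/apps{APP_ID}",
        data=body,
        method="PUT",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"token={token}",
        },
    )


if __name__ == "__main__":
    # Illustrative values only; pick figures that match your own workload.
    req = build_resource_update(cpus=2.0, mem_mb=4096, token="<your-dcos-token>")
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
    # To apply the change for real: urllib.request.urlopen(req)
```

Building the request separately from sending it makes it easy to review exactly what will change before Marathon starts a new deployment.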

Scaling Out: Adding More Instances to an Existing Application

To scale our application and add additional instances, we can use the DC/OS "Scale" function. To increase the scale of our application, all we need to do is log into the DC/OS management GUI, navigate to the Services tab, select our benefits application, and then click on the three dots on the right side of the screen to bring up the command menu as shown below.

Selecting the Scale command will present the Scale Service dialog box as shown below. All we need to do is set the number of desired instances, which in our case is just 2, and then click "Scale Service". With DC/OS, you can not only scale specific applications in this manner, but you can also quickly and easily scale whole groups and pods of applications.
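The same scale-out can also be scripted. As a rough sketch, assuming the cluster address and token below (both placeholders) and an app ID inferred from the Marathon-LB backend name shown later in this post, setting the `instances` field through Marathon's `/v2/apps/{app-id}` endpoint has the same effect as the dialog box:

```python
import json
import urllib.request

# Assumption: placeholder cluster address; replace with your own.
MARATHON_URL = "https://dcos.example.com/service/marathon"


def build_scale_request(app_id: str, instances: int, token: str) -> urllib.request.Request:
    """Build (but do not send) a PUT request that sets the desired instance count."""
    if instances < 0:
        raise ValueError("instances must be zero or greater")
    body = json.dumps({"instances": instances}).encode("utf-8")
    return urllib.request.Request(
        url=f"{MARATHON_URL}/v2/apps/{app_id.strip('/')}",
        data=body,
        method="PUT",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"token={token}",
        },
    )


if __name__ == "__main__":
    # App ID inferred from the backend name in this post; token is a placeholder.
    req = build_scale_request("/dept-a/weblogic-benefits-app", 2, "<your-dcos-token>")
    print(req.get_method(), req.full_url, req.data.decode("utf-8"))
    # To apply the change for real: urllib.request.urlopen(req)
```

Scaling back down is the same call with a smaller `instances` value.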

We don't want to take an outage while scaling our application, and DC/OS makes this possible by keeping the original instance fully operational and accepting connections, while the new instance goes through the Staging, Running, and Health Check validation steps.

Our second instance of the migrated benefits application is now fully deployed. Based on the green health check indicator shown below, we know that our legacy application is running and ready to accept HTTP connections.
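If you would rather confirm this from a script than from the UI, Marathon reports task counts on each app (`instances`, `tasksStaged`, `tasksRunning`, `tasksHealthy`, and any in-flight `deployments`). The sketch below assumes you have already fetched the app's JSON from `/v2/apps/{app-id}`; the two sample payloads are made up for illustration.

```python
def deployment_is_healthy(app: dict) -> bool:
    """Return True when every requested instance is running and passing health checks."""
    wanted = app["instances"]
    return (
        app.get("tasksStaged", 0) == 0          # nothing still staging
        and app.get("tasksRunning", 0) == wanted
        and app.get("tasksHealthy", 0) == wanted
        and not app.get("deployments")          # no deployment still in progress
    )


if __name__ == "__main__":
    # Hypothetical payloads: one taken mid-scale, one after the scale completes.
    scaling = {"instances": 2, "tasksStaged": 1, "tasksRunning": 1,
               "tasksHealthy": 1, "deployments": [{"id": "example"}]}
    done = {"instances": 2, "tasksStaged": 0, "tasksRunning": 2,
            "tasksHealthy": 2, "deployments": []}
    print(deployment_is_healthy(scaling))
    print(deployment_is_healthy(done))
```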

All applications running on DC/OS benefit from built-in high-availability features. In this example, DC/OS leveraged its built-in networking and load balancing between instances. Applications get a single configurable DNS entry (a VIP, or virtual IP), and all traffic destined for this entry is automatically load balanced across all healthy application instances. Unhealthy or unresponsive instances are automatically removed from rotation and restarted. The DNS entry guarantees that the application is accessible regardless of where its instances are dynamically deployed within DC/OS.

If you wish to scale your app back down, simply reverse the steps listed above to remove or reconfigure instances of the Java EE app.

Can Java EE App Operations on DC/OS Really Be That Easy?

In short - yes! We added a new instance (and reconfigured the existing instance) of our Java EE application without first waiting for another virtual machine or physical machine to get deployed. We also did not have to reconfigure any routers or load balancers. The time required to scale our application went from a week or so at best (or as many as 6 months in many organizations) down to just a few minutes, and that even included checking that the instance is healthy. Not only have we saved resources compared to our legacy environment, we have also saved time and redeployed a scalable, highly available app.

The ease of deployment was made possible through DC/OS's built-in load balancing and its ingress load balancer (Marathon-LB), which is based on HAProxy. Customers also have the option to use another load balancer, such as F5. While our application instances are launched on dynamic ports, the integration with DC/OS load balancing and Marathon-LB handles routing the traffic to those dynamically assigned ports without operator intervention, eliminating port conflicts and enabling more apps to be deployed on our cluster nodes.
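To make the dynamic-port idea more concrete, here is a rough sketch of the pieces of an app definition that Marathon-LB relies on: a `HAPROXY_GROUP` label, a host port of `0` (meaning "assign one dynamically"), and a fixed service port. We are assuming a Docker container in bridge mode and WebLogic's default listen port of 7001; neither is stated in this post, and the exact placement of the port-mapping fields differs between Marathon versions. The service port 10100 and the app ID are inferred from the backend name Marathon-LB shows for our app.

```python
import json

# Assumption-heavy fragment: only the fields relevant to load balancing are shown.
APP_FRAGMENT = {
    "id": "/dept-a/weblogic-benefits-app",     # inferred from the backend name
    "instances": 2,
    "labels": {"HAPROXY_GROUP": "external"},   # exposes the app through Marathon-LB
    "portMappings": [
        {
            "containerPort": 7001,  # assumed WebLogic listen port
            "hostPort": 0,          # 0 asks for a dynamically assigned host port
            "servicePort": 10100,   # stable port that Marathon-LB listens on
            "protocol": "tcp",
        }
    ],
}


def backend_name(app_id: str, service_port: int) -> str:
    """Derive the HAProxy backend name from an app ID and its service port."""
    return f"{app_id.strip('/').replace('/', '_')}_{service_port}"


if __name__ == "__main__":
    print(json.dumps(APP_FRAGMENT, indent=2))
    port = APP_FRAGMENT["portMappings"][0]["servicePort"]
    print(backend_name(APP_FRAGMENT["id"], port))
```

Because each instance gets its own host port, two instances can land on the same agent without colliding, while clients keep talking to the one stable service port.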

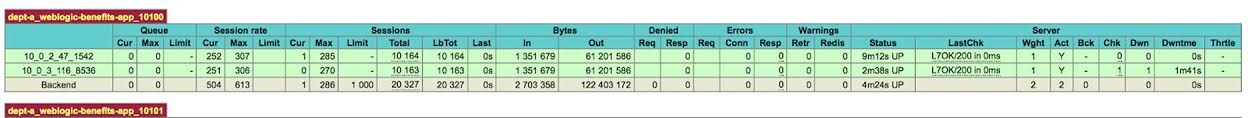

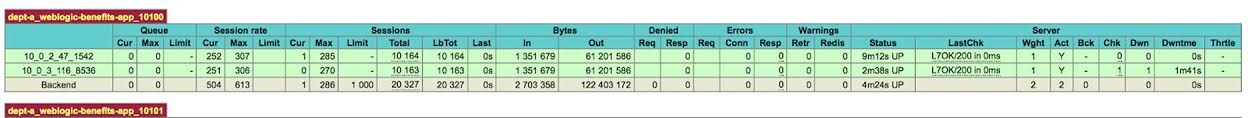

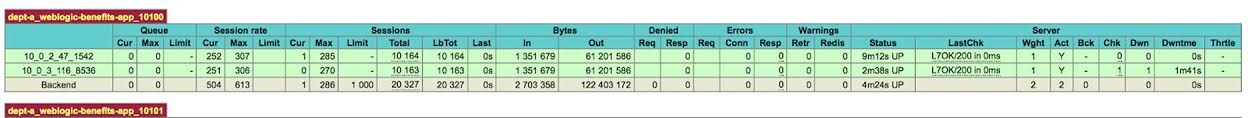

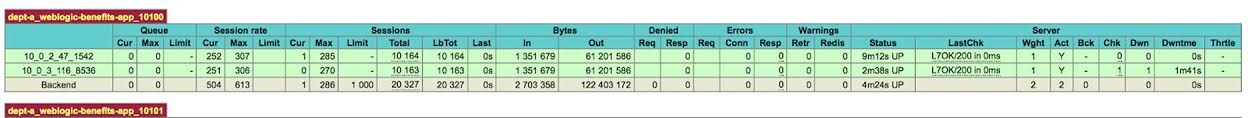

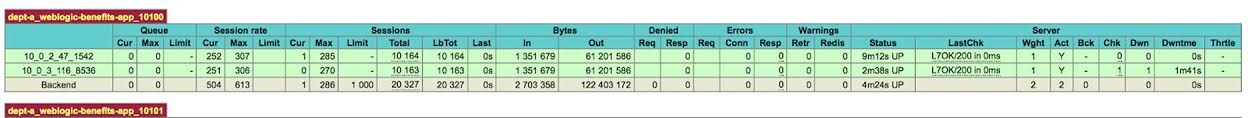

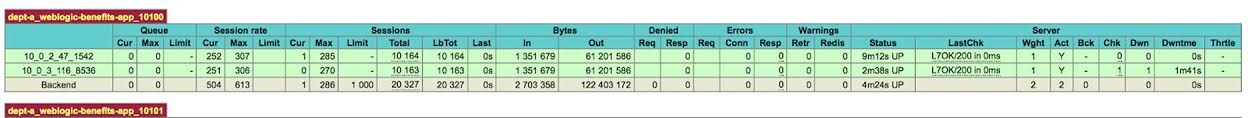

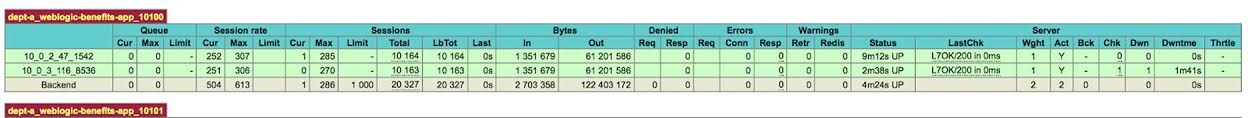

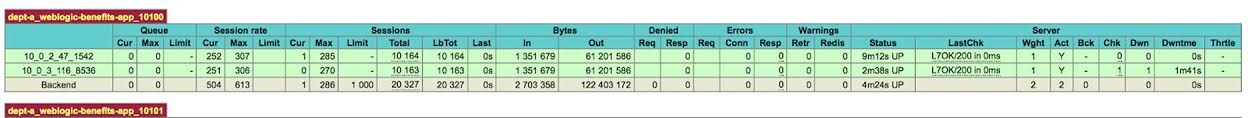

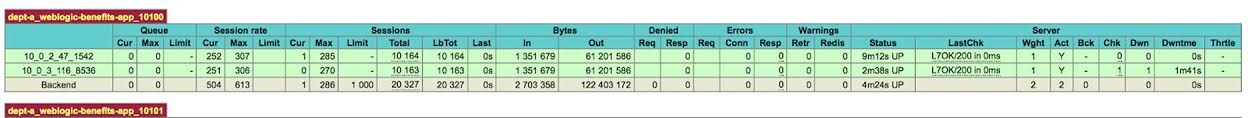

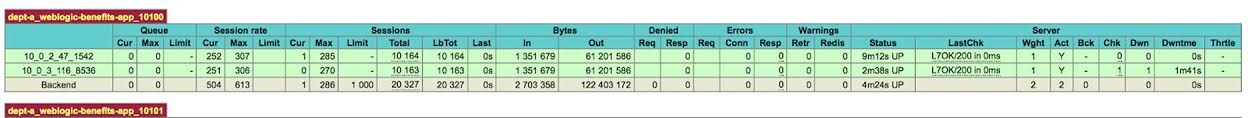

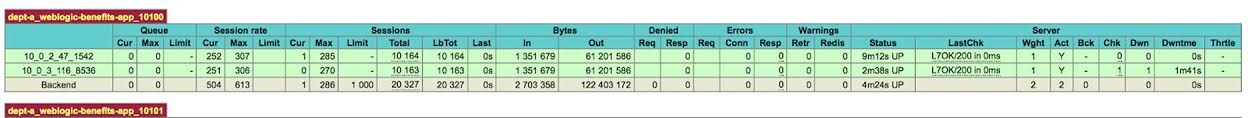

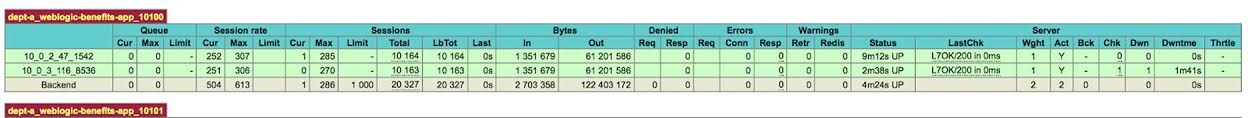

Digging deeper, we can view each app instance through the HAProxy stats page (http://{your private ip address}:9090/haproxy?stats). Looking down the list of applications being handled by this instance of Marathon-LB, we see our application 'dept-a_weblogic-benefits-app_10100'. We can see that any scale issues we might have had before have been resolved by adding a second instance: there are no requests in the queue, and the requests are evenly balanced between the instance on host port 1542 and the instance on host port 8536. We've scaled our application to handle the additional load and to ensure there's a backup instance on our cluster in case of an outage.
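The same check can be automated. HAProxy can serve its stats as CSV (append `;csv` to the stats URL), with columns such as `pxname`, `svname`, `qcur` (requests currently queued), and `stot` (total sessions). The sketch below parses that format and pulls out the per-instance numbers for our backend. The sample rows, including the server names and session counts, are invented for illustration; only the backend name and the two host ports come from this post.

```python
import csv
import io

# Hypothetical sample in HAProxy's CSV stats layout (trimmed to a few columns).
SAMPLE_CSV = """\
# pxname,svname,qcur,qmax,scur,smax,slim,stot
dept-a_weblogic-benefits-app_10100,FRONTEND,,,2,5,50000,240
dept-a_weblogic-benefits-app_10100,10_0_1_12_1542,0,0,1,3,,121
dept-a_weblogic-benefits-app_10100,10_0_3_7_8536,0,0,1,3,,119
dept-a_weblogic-benefits-app_10100,BACKEND,0,0,2,5,5000,240
"""


def per_server_stats(csv_text: str, backend: str) -> dict:
    """Return queued requests and total sessions for each server in a backend."""
    cleaned = csv_text.lstrip("# ").strip()  # header line starts with "# "
    stats = {}
    for row in csv.DictReader(io.StringIO(cleaned)):
        # Skip other backends and the aggregate FRONTEND/BACKEND rows.
        if row["pxname"] != backend or row["svname"] in ("FRONTEND", "BACKEND"):
            continue
        stats[row["svname"]] = {
            "queued": int(row["qcur"] or 0),
            "total_sessions": int(row["stot"] or 0),
        }
    return stats


if __name__ == "__main__":
    result = per_server_stats(SAMPLE_CSV, "dept-a_weblogic-benefits-app_10100")
    for server, numbers in result.items():
        print(server, numbers)
```

An empty queue and roughly equal session totals across servers is the scripted equivalent of what we read off the stats page.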

Solving Java EE Nightmares with DC/OS