USE-CASE

Running Kubernetes Seamlessly Across Distributed Hybrid and Multi-Cloud Environments

See how DKP can get you up and running in hybrid and multi-cloud environments.

Benefits of Kubernetes in Hybrid and Multi-Cloud Environments

Enhanced Flexibility

Kubernetes is infrastructure agnostic, meaning that it works with any type of underlying infrastructure, whether it’s in a private cloud, public cloud, or any combination of them. And because it’s highly portable, Kubernetes can run workloads on both private and public clouds, as well as workloads that spread across multiple clouds, offering tremendous flexibility to deliver success where you need it.

Avoid Vendor Lock-In

For most companies, avoiding vendor lock-in is a core requirement. Organizations operating a multi-cloud environment can avoid getting locked in to a single cloud provider’s services, infrastructure, and pricing model, which gives them a greater range of choices for building an optimal business solution from best-of-breed services.

Lower Costs

Organizations operating either a hybrid or multi-cloud environment can play different cloud providers and internal resources off against one another to obtain the lowest cost. They can use certain services on particular clouds without being bound to those clouds for all services. And if they have large, expensive-to-move datasets in a particular environment, they can maximize the value of their existing investments in both on-premises and public cloud infrastructure by running compute where the data is.

Meet Regulatory Requirements

Security-conscious organizations can solve data sovereignty challenges within their own private, on-premises environment without losing the benefits and speed of the cloud. Companies can choose which data and applications to deploy to the public cloud and which to keep on their private cloud, while maintaining security and compliance.

Reduce Risk

In the event of service outages or spikes in usage, organizations can reduce risk by providing alternate resources for running applications. In both hybrid and multi-cloud environments, organizations can scale down capacity when it’s not needed and add capacity when there is greater demand, resulting in lower operational costs.

Challenges of Kubernetes in Hybrid and Multi-Cloud Environments

Lack of Centralized Visibility Across the Kubernetes Landscape

Operators need centralized visibility of the organization’s entire stack in order to effectively monitor cluster performance and overall spend. Because various parts of the organization adopt a wide range of approaches to using Kubernetes, there is often no centralized, overarching visibility into the clusters you are monitoring, which impacts the bottom line. If a cluster goes down, you lose valuable time troubleshooting the problem. You can’t easily obtain the infrastructure insights needed to improve resource utilization. And you don’t have a clear understanding of your Kubernetes resource costs. While cloud providers offer cost management and observability tools, it’s important to have unified visibility and cost granularity across multiple infrastructures.

Getting into Production is Manual and Time Consuming

In order to meet their business objectives, organizations need the digital agility that cloud-native technologies and Kubernetes provide. However, getting Kubernetes into production is no easy feat. Delivering an end-to-end solution involves a variety of open-source technologies that can take months to select and integrate. These technologies need to be integrated, automated, and tested for resiliency, which is expensive to build and maintain. And many public cloud providers don’t provide a catalog of open-source tools that are fully integrated out-of-the-box. Organizations need a Kubernetes solution that can get them to production as quickly as possible, so they can focus on building apps that matter to their business.

Managing Ongoing Day 2 Operations

Organizations need consistent automation to improve repeatability and time-to-market for new application needs. However, many Kubernetes solutions don’t provide a catalog of open-source tools that are fully integrated for out-of-the-box CI/CD pipelining. Manual deployments are time-consuming and require significant engineering effort. And when overseeing a large deployment, it’s easy to make mistakes, such as missing an error in code, misreading a line of text, or making changes directly on the server, all of which can lead to unplanned downtime and other quality issues down the road. Organizations need the ability to manage the lifecycle of their infrastructure and applications across all of the clouds they are using.

Lack of Governance and User Access Controls

When cluster sprawl is left unchecked, it can introduce all kinds of complexities around visibility, management, and security. How do you make sure clusters follow a blueprint that has the right access controls? How do you ensure sensitive information such as credentials is distributed in the right way? How do you ensure the right versions of software or workloads are available? To do this effectively, organizations need an automated solution that can help them set the right policies across multiple clouds, or across multiple clusters running in the same environment, so they can avoid risk and reduce operational costs while meeting internal and regulatory compliance requirements.

Lack of an Open Single Cloud Solution

Each private and public cloud platform has its own set of unique features and capabilities to build, run, and operate Kubernetes. These variations between clouds complicate the process of abstraction. Instead of one mutually comprehensible language, each cloud speaks its own dialect, increasing the difficulty and friction of managing and moving workloads across multiple clouds. Enterprises require a flexible, single cloud solution to run their applications on multiple private or public cloud infrastructures, without lock-in. Without one, you’ll need to plan for each cloud environment independently, which increases overhead and opportunity costs.

Limited Choices for Leveraging External Cloud-Native Expertise

Enterprises need to do things better, faster, and more affordably than their competitors. To do so, they need a trusted partner who can guide and train them, and help them design and build a production-ready infrastructure that they can manage and feel confident using. In addition, they must be able to get external support when difficult situations or complex use cases arise that require experienced outside help. With limited options for external support, training, and services, these complexities can be a huge barrier to success.

How D2iQ Kubernetes Platform Delivers Success in Hybrid and Multi-Cloud Environments

Enhance Visibility Across the Kubernetes Landscape

Provide Real-Time Cost Management and Easier Troubleshooting

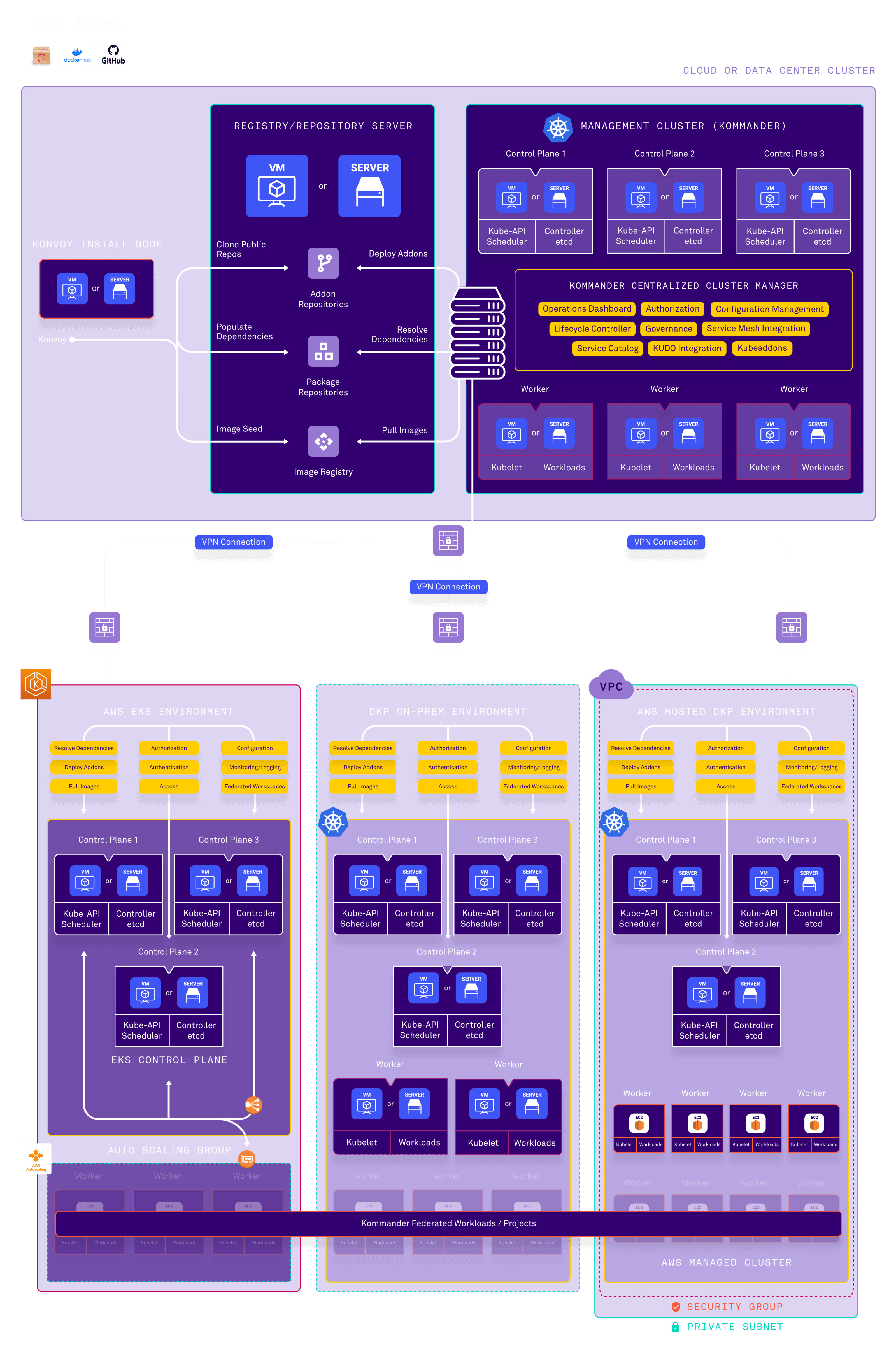

DKP’s federated management plane provides centralized observability of any Kubernetes cluster or distribution, wherever it is running, from a single, central point of control. DKP’s metrics-based monitoring and real-time alerts help monitor cluster performance in complex hybrid or multi-cloud environments. Admins can also manage logs by tenant or workspace for more granular control and simpler troubleshooting. DKP’s out-of-the-box Kubecost integration provides real-time cost management capabilities and the ability to correctly attribute costs by department, project, application, team, or any other logical unit.
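As a rough illustration of what attributing costs by a logical unit means, the sketch below groups workload costs from several clusters by a label. It does not use the DKP or Kubecost APIs; the `attribute_costs` function, the workload records, the cluster names, and the cost figures are all hypothetical.

```python
from collections import defaultdict


def attribute_costs(workloads, label):
    """Sum hourly cost per value of `label`.

    Workloads that do not carry the label are grouped under "unallocated".
    """
    totals = defaultdict(float)
    for workload in workloads:
        owner = workload["labels"].get(label, "unallocated")
        totals[owner] += workload["hourly_cost"]
    return dict(totals)


# Hypothetical workloads spread across three infrastructures.
workloads = [
    {"name": "checkout", "cluster": "aws-prod", "labels": {"team": "payments"}, "hourly_cost": 1.50},
    {"name": "ledger", "cluster": "onprem-1", "labels": {"team": "payments"}, "hourly_cost": 0.75},
    {"name": "search", "cluster": "azure-prod", "labels": {"team": "discovery"}, "hourly_cost": 2.00},
    {"name": "scratch", "cluster": "aws-dev", "labels": {}, "hourly_cost": 0.25},
]

by_team = attribute_costs(workloads, "team")
for team, cost in sorted(by_team.items()):
    print(f"{team}: {cost:.2f} per hour")
```

The same function can group by any other label, such as a department or project, which is the sense in which costs are attributed to "any other logical unit".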

Accelerate Time-to-Market with Pure Upstream, Open-Source Kubernetes

Stand up Core Kubernetes Services in Minutes, Instead of Months

Engineered from the beginning to be an open platform, DKP is built on pure upstream open-source Kubernetes and includes all of the supporting platform applications needed to run Kubernetes in production on any private or public cloud infrastructure. D2iQ does the difficult work for you by selecting the core platform services from the CNCF landscape, integrating them into the stack, automating their installation, and testing them to ensure they all work together. This makes it easier for teams to set up and consistently deploy applications with the speed and value of the cloud.

Simplify Application and Lifecycle Management

Embrace a Declarative Approach to Kubernetes Using GitOps

The move to Cluster API (CAPI) simplifies declarative infrastructure management using GitOps, automating many of the formerly manual processes required to keep systems running and scaling. DKP also integrates FluxCD to enable GitOps for both applications and infrastructure, supporting canary deployments and A/B rollouts. Embracing a declarative approach to Kubernetes makes deploying, managing, and scaling Kubernetes workloads more auditable, more repeatable, and less error-prone. With continuous delivery to multiple clusters from a single point of control, you can ensure consistent upgrades, deployments, and security policies across any hybrid or multi-cloud infrastructure with zero downtime.
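The idea behind a declarative approach can be sketched in a few lines: the desired state lives in version control, and a reconciler compares it with what is actually running and works out the changes needed. The example below is a simplified illustration only, not how CAPI or FluxCD are implemented; the `plan_reconcile` function and the workload specs are hypothetical.

```python
def plan_reconcile(desired, actual):
    """Return the actions needed to make `actual` match `desired`.

    Both arguments map a resource name to its spec (a plain dict here).
    """
    actions = []
    for name, spec in sorted(desired.items()):
        if name not in actual:
            actions.append(("create", name))
        elif actual[name] != spec:
            actions.append(("update", name))
    for name in sorted(actual):
        if name not in desired:
            actions.append(("delete", name))
    return actions


# Hypothetical desired state (as it might be declared in Git) and observed state.
desired = {
    "web": {"image": "web:2", "replicas": 3},
    "api": {"image": "api:1", "replicas": 2},
}
actual = {
    "web": {"image": "web:1", "replicas": 3},
    "cron": {"image": "cron:1", "replicas": 1},
}

plan = plan_reconcile(desired, actual)
print(plan)
```

Because the plan is derived from the difference between the two states, running the same reconciliation against a cluster that already matches the declared state produces no actions, which is what makes the approach repeatable.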

Deliver Federated Management and Enterprise Access Controls

Ensure Consistency, Security, and Performance

DKP provides centralized governance and federated management to flexibly configure and manage users, roles, permissions, quotas, networks, and policies consistently across clusters. With DKP, admins can determine which users have access to which clusters, resources, or applications in order to enforce lines of separation, and can limit or manage resource use to plan cluster capacity efficiently. Further, they have the security controls to govern pod network access and ensure conformance to sanctioned versions of Kubernetes and its supporting platform services, reducing security exposure. Together, these capabilities allow for greater standardization, security, and performance across clusters, without interfering with the day-to-day business functions and requirements that different clusters support.
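To make the notion of conformance to sanctioned versions concrete, the sketch below checks a fleet of clusters against an approved list. It is an illustration under stated assumptions rather than DKP's policy engine; the `find_unsanctioned` function, the cluster names, and the version strings are hypothetical.

```python
def find_unsanctioned(clusters, sanctioned_versions):
    """Return the names of clusters running a version outside the approved set."""
    return sorted(
        name
        for name, version in clusters.items()
        if version not in sanctioned_versions
    )


# Hypothetical fleet inventory: cluster name -> Kubernetes version.
clusters = {
    "aws-prod": "1.27",
    "azure-prod": "1.27",
    "onprem-legacy": "1.22",
    "edge-01": "1.26",
}
sanctioned_versions = {"1.26", "1.27"}

violations = find_unsanctioned(clusters, sanctioned_versions)
print(violations)
```

A centralized policy of this kind is evaluated once against every cluster, rather than each team checking its own environment by hand.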

Simplify Moving Workloads Across Multiple Clouds

Run Applications in Distributed, Heterogeneous Environments

DKP was created to be platform agnostic, meaning that you can run it on any infrastructure. It works out-of-the-box and in the same way in the cloud or on-premises, without having to tweak a particular environment. Rather than dealing with many different flavors and dialects of Kubernetes that require admins to manage each environment differently, you can unify operations across distributed, heterogeneous infrastructures from a single, centralized point of control, making portability across different environments much easier.

Harness Premier Domain Expertise

Drive Kubernetes Adoption Through Expert Services, Training, and Support

DKP provides domain expertise at every stage of the application lifecycle through its professional services, training, and end-to-end support offerings for both Kubernetes and the complete stack of platform services needed for Day 2 production. Rather than dealing with a myriad of open-core CNCF commercial vendors, you have a single partner to help you drive adoption, up-level existing teams, and troubleshoot issues. This holistic approach accelerates time-to-value, reduces TCO, mitigates the risk of adopting Kubernetes, and dramatically reduces the operational burden on enterprise teams.

Tjebbe de Winter

Managing Director at Cyso

Key Features and Benefits

Operational Dashboard

Gain instant visibility into the Kubernetes landscape, and operate it more efficiently, from a single, centralized point of control.

Centralized Observability

Provide enhanced visibility and control at an enterprise level, with comprehensive logging and monitoring across all clusters.

Granular Cost Control

Out-of-the-box Kubecost integration provides real-time cost management and attribution of costs to the right departments, applications, projects, or other organizational groups, for reduced waste, better forecasting, and lower TCO.

Service Mesh Integration

Add advanced networking capabilities, such as multi-cluster and cross-cluster service discovery, load balancing, and security across a variety of hybrid and multi-cloud environments.

Declarative Automated Installer

Accelerate time-to-production on any infrastructure with a highly automated installation process that includes all of the open-source components needed for production.

Governance Policy Administration

Meet the requirements of security and audit teams with centralized cluster policy management.

Centralized Authorization

Leverage existing authentication and directory services for secure access and single sign-on to a broad range of cluster-based resources.

Cluster Autoscaling

Automatically scale infrastructure capacity up and down based on the resource needs of the running workloads.

Lifecycle Automation

Ensure consistent upgrades, deployment, and security policies for both infrastructure (through CAPI) and applications (through FluxCD).

