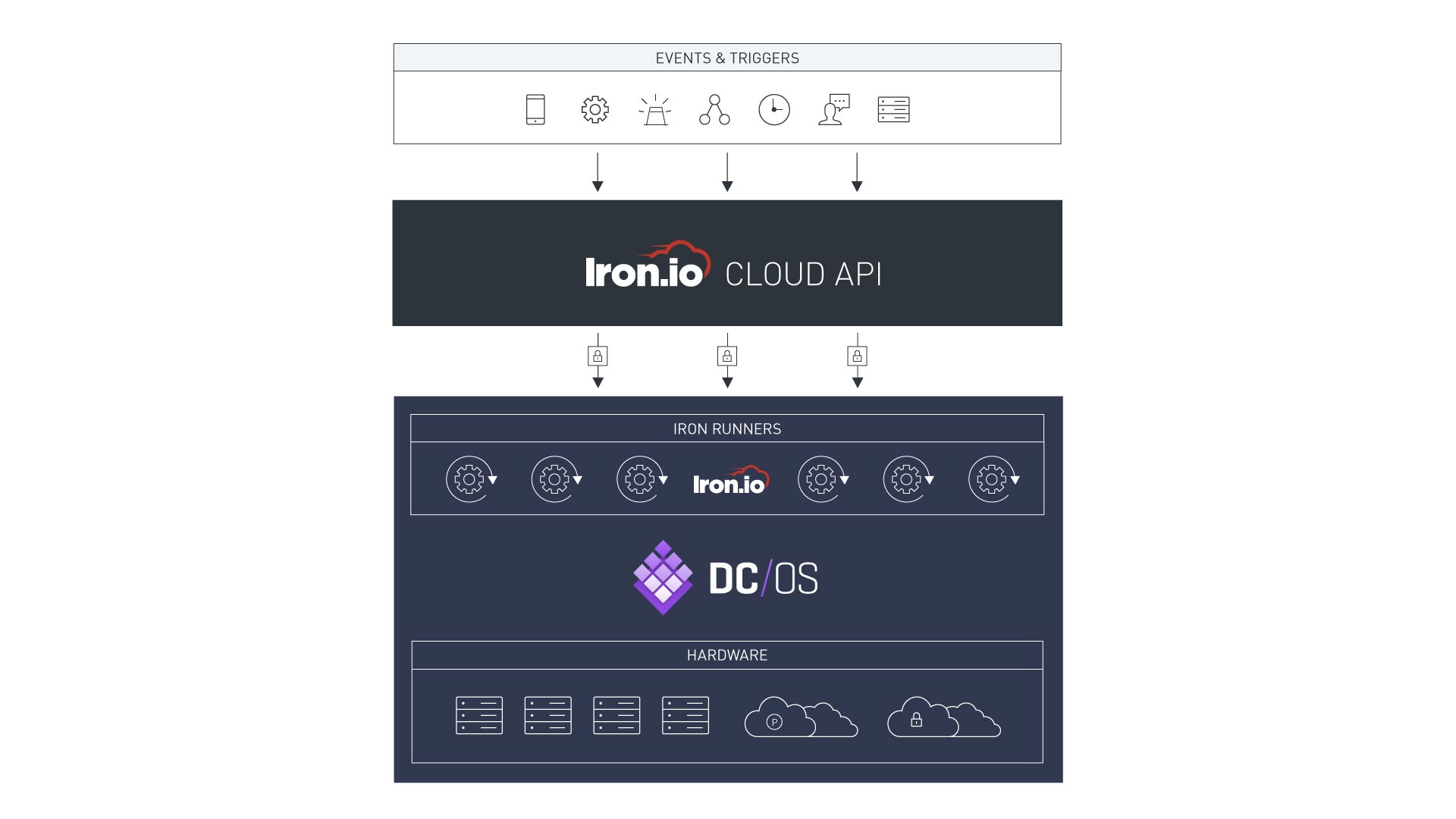

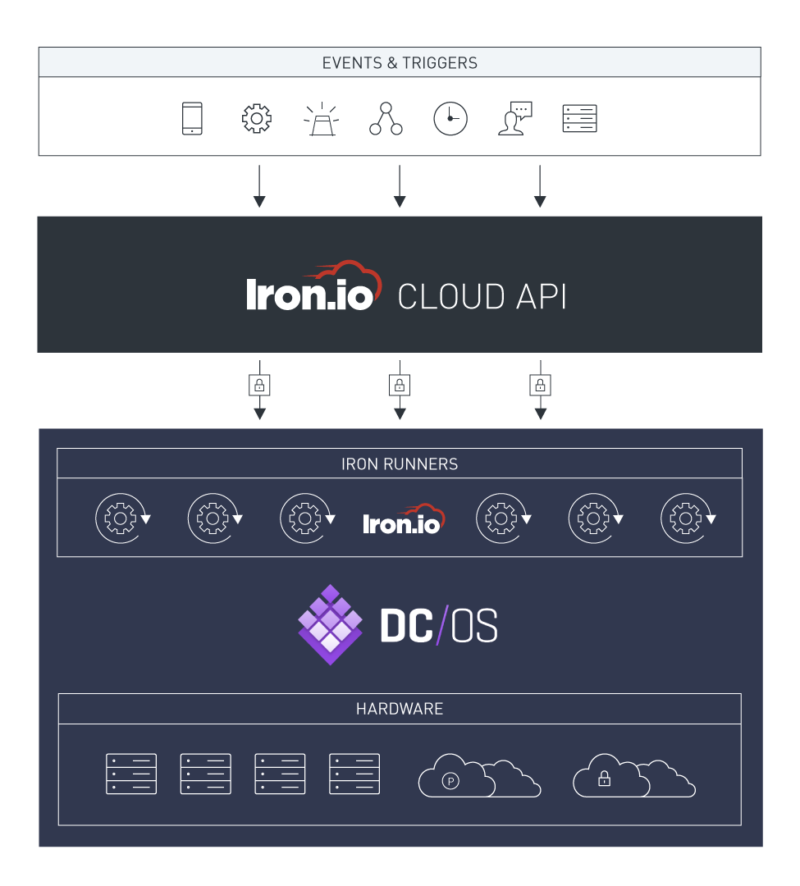

Iron.io customers already love the simplicity of Iron.io's serverless platform, a multi-cloud, Docker-based job-processing service. By running Iron.io on DC/OS, joint customers would gain more flexibility in their hybrid cloud strategy and could run distributed job processing across heterogeneous environments. This would be a big win for enterprise customers who want to pair the scalability and operational ease of DC/OS with Iron.io's serverless and microservices expertise.

This is why our two companies are working together on a series of reference architectures for enterprises that want to adopt microservices at scale, covering a variety of modern applications and workloads on containerized infrastructure.

Benefits to customers

DC/OS drastically simplifies the process of running applications, including those built around Docker containers and big data systems. It is built on a foundation of Apache Mesos, which works under the covers to aggregate up to tens of thousands of machines into a single resource pool. DC/OS services are highly available, and users can easily deploy and manage distributed services, as well as massive collections of application containers, without configuring servers.

Iron.io provides a hosted service that can deploy workers across public and private cloud environments. These IronWorkers are instantiated in the appropriate target clouds and execute their business logic before being destroyed.

More often than not, IronWorker jobs connect to enterprise big data services and SQL/NoSQL databases. This is where the power of DC/OS can play a key role, by allowing infrastructure developers to build distributed systems that dynamically leverage a range of underlying resources, offering the following key benefits:

- Hybrid microservices: Both Iron.io and Mesosphere support public, private and hybrid cloud environments. This lets customers of all sizes, from startups to large enterprises, deploy "Hybrid Microservices": workload pipelines and back-end data services can be assembled flexibly based on performance, security, price and any other constraints customers care about.

- Event-driven resource optimization: As IronMQ queues grow, they can fire triggers that DC/OS can use to scale out the targeted resources.

- Polyglot languages, meet polyglot databases: The benefits of being polyglot have been widely publicized in cloud-native environments. Combining Iron.io's support for multiple languages with Mesosphere's ability to run a variety of big data and analytics platforms provides the greatest flexibility in the industry, with a turnkey approach.

- Containers-as-a-Service (CaaS): Especially for born-in-the-cloud SaaS companies, though not only for them, a CaaS environment can accelerate the evolution of their platforms to microservices. Our combined solution provides comprehensive CaaS capabilities.
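To make the event-driven idea a little more concrete, here is a minimal Python sketch of a scaling policy that turns a queue backlog into a target instance count. The function name, the jobs-per-instance ratio and the min/max bounds are all hypothetical. How the queue depth is read from IronMQ and how DC/OS applies the result are deliberately left out.

```python
import math


def desired_instances(queue_depth: int, jobs_per_instance: int,
                      min_instances: int = 1, max_instances: int = 10) -> int:
    """Hypothetical policy: map a queue backlog to a target instance count.

    queue_depth       -- messages currently waiting in the queue
    jobs_per_instance -- backlog one instance is assumed to absorb
    The result is clamped to the range [min_instances, max_instances].
    """
    if jobs_per_instance <= 0:
        raise ValueError("jobs_per_instance must be positive")
    if queue_depth < 0:
        raise ValueError("queue_depth cannot be negative")
    needed = math.ceil(queue_depth / jobs_per_instance)
    return max(min_instances, min(max_instances, needed))


if __name__ == "__main__":
    for depth in (0, 40, 250, 5000):
        # Prints targets of 1, 1, 3 and 10 instances respectively.
        print(depth, "->", desired_instances(depth, jobs_per_instance=100))
```

Clamping keeps a sudden backlog spike from requesting more capacity than the cluster is meant to give this workload.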

Enabling Iron.io IronWorker on Mesosphere

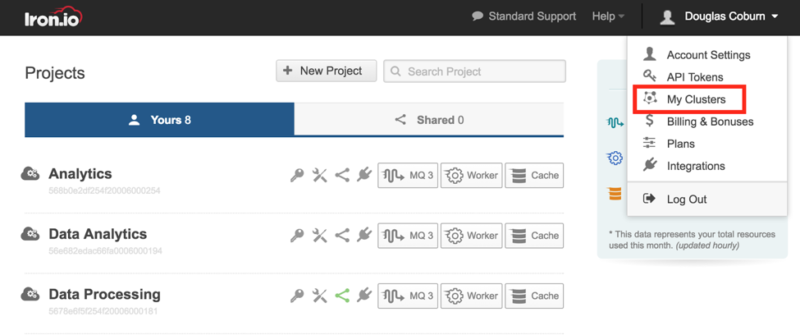

2. Contact sales@iron.io for access to the Hybrid Cluster configuration

4. Go to My Clusters

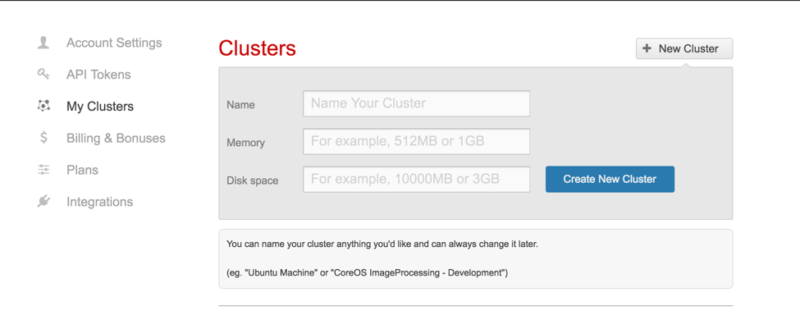

5. Select "create a new cluster" and fill in the following fields:

- Name: Any name you like.

- Memory: The amount of memory each worker in the cluster is limited to.

- Disk space: The amount of disk space each worker's container can use while running.

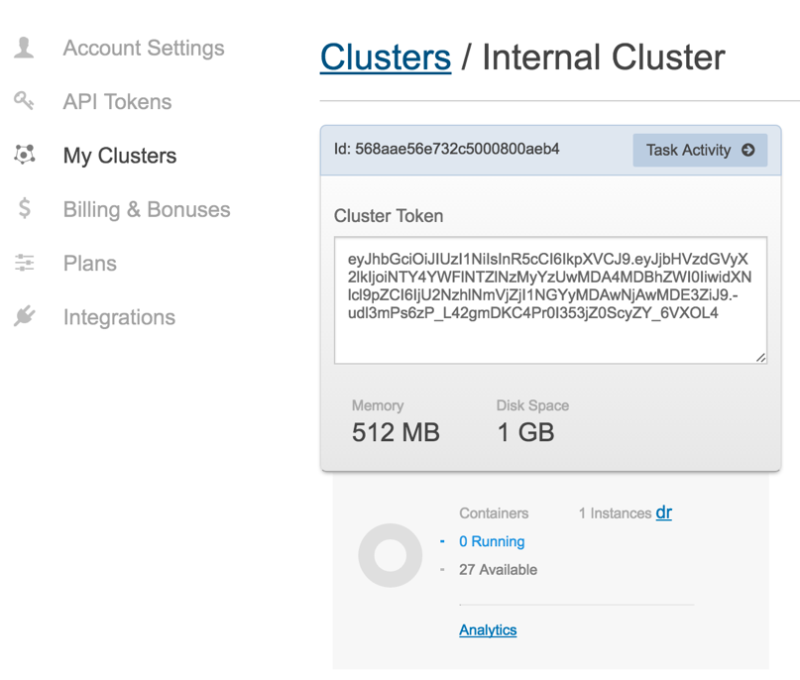

6. Select your cluster and note its Cluster ID and Cluster Token. You will need both later.

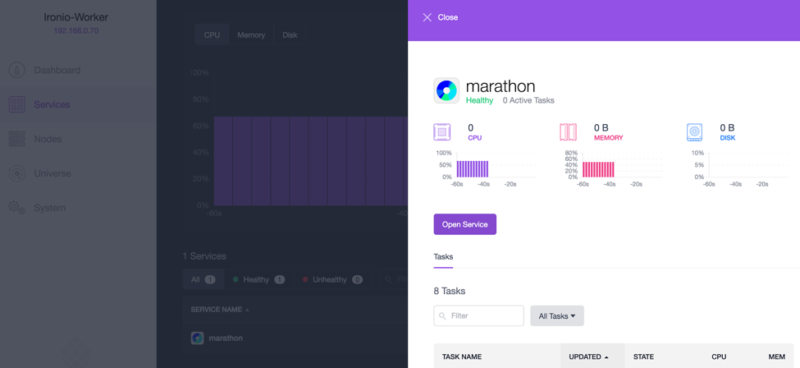

7. Log in to your DC/OS cluster

8. Select "Services"

9. Click on "Marathon"

10. Select "Open Service"

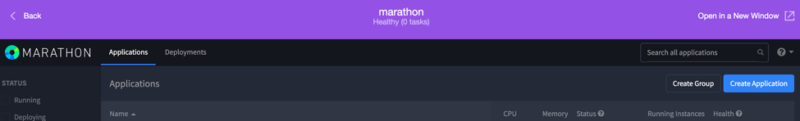

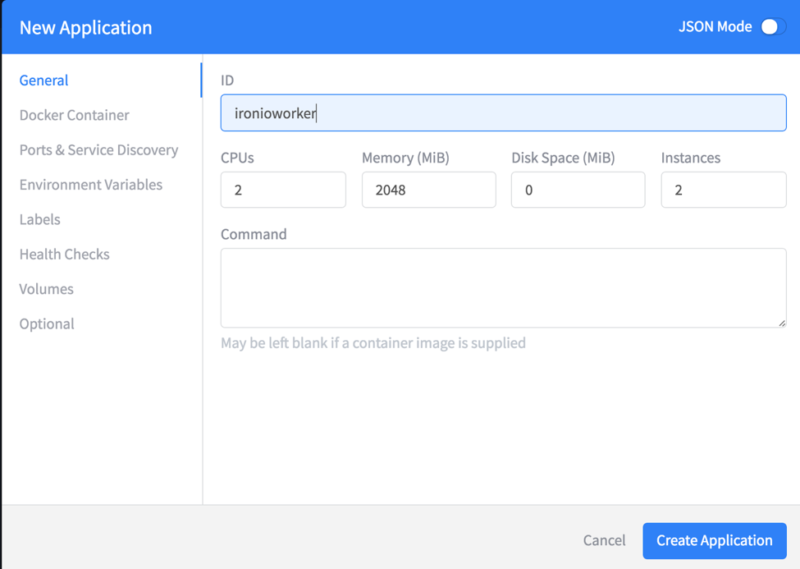

11. In the Marathon UI select "Create new Application"

12. In the "New Application" dialog, give the application any name

- You can specify the number of CPUs and the amount of memory to make available to each container.

- IronWorker automatically divides each instance's memory into workers. For example, an instance with 2048 MB of memory and 512 MB workers runs 4 workers.
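Here is the sizing arithmetic from step 12 as a small Python helper. It assumes that no memory is reserved for overhead and that any remainder too small for another worker goes unused. The text does not say how IronWorker treats leftovers, so this is an assumption.

```python
def workers_per_instance(instance_memory_mb: int, worker_memory_mb: int) -> int:
    """Number of whole workers that fit in one Marathon instance.

    Assumes memory is split evenly with no overhead reserved, and that any
    remainder too small for another worker simply goes unused.
    """
    if worker_memory_mb <= 0:
        raise ValueError("worker_memory_mb must be positive")
    return instance_memory_mb // worker_memory_mb


if __name__ == "__main__":
    print(workers_per_instance(2048, 512))  # 4, the example from the text
    print(workers_per_instance(2000, 512))  # 3; the leftover 464 MB is unused
```

Sizing instance memory as an exact multiple of the worker size avoids stranding memory.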

13. Under "Docker Container", set the following:

- Image: index.docker.io/iron/gorunner (the image that will be pulled for the container)

- Network: Any option should work, as long as the container can make outbound connections on port 443 to *.iron.io

- Select "Extend runtime privileges"
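If you prefer Marathon's JSON app definition to the UI, the Docker settings in this step roughly correspond to the container section below, built here as a Python dict. This sketch assumes the Marathon 1.x schema, where `network` and `privileged` live under `docker`. Newer Marathon releases move networking to a top-level `networks` field, so check the version you run. `BRIDGE` is only one possible network mode.

```python
import json


def docker_container_section(image: str = "index.docker.io/iron/gorunner",
                             network: str = "BRIDGE") -> dict:
    """Container block of a Marathon 1.x app definition (sketch)."""
    return {
        "type": "DOCKER",
        "docker": {
            "image": image,
            # Any mode should work if outbound 443 to *.iron.io is allowed.
            "network": network,
            # The UI's "Extend runtime privileges" checkbox maps to privileged mode.
            "privileged": True,
        },
    }


if __name__ == "__main__":
    print(json.dumps({"container": docker_container_section()}, indent=2))
```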

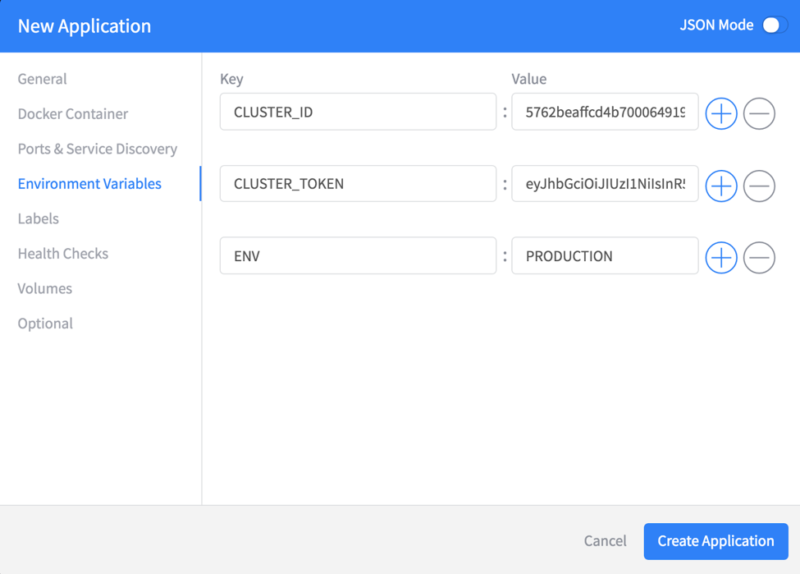

14. Select "Environment Variables"

Create three environment variables:

CLUSTER_ID=CLUSTERID (the Cluster ID from the My Clusters page)

CLUSTER_TOKEN=CLUSTERTOKEN (the Cluster Token from the My Clusters page)

ENV=PRODUCTION
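In a Marathon app definition, the same three variables go into the `env` map, which maps strings to strings. The sketch below builds that map and refuses empty credentials. The `IRON_CLUSTER_ID` and `IRON_CLUSTER_TOKEN` names it reads from the local shell are hypothetical placeholders, used so the real token is not hard-coded.

```python
import os


def worker_env(cluster_id: str, cluster_token: str) -> dict:
    """The three environment variables from step 14, as a Marathon "env" map."""
    if not cluster_id or not cluster_token:
        raise ValueError("cluster ID and token are both required")
    return {
        "CLUSTER_ID": cluster_id,
        "CLUSTER_TOKEN": cluster_token,
        "ENV": "PRODUCTION",
    }


if __name__ == "__main__":
    # Hypothetical local variable names; the fallbacks are dummy values.
    env = worker_env(os.environ.get("IRON_CLUSTER_ID", "example-id"),
                     os.environ.get("IRON_CLUSTER_TOKEN", "example-token"))
    print(sorted(env))  # ['CLUSTER_ID', 'CLUSTER_TOKEN', 'ENV']
```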

15. Select "Health Checks"

Create three health checks of type COMMAND (all times are in seconds):

Health check 1

- COMMAND: ps -Aef | grep -q '[.]/runner' (the brackets stop grep from matching its own process, which would make the check always pass)

- Grace Period: 300

- Interval: 60

- Timeout: 20

- Max Consecutive Failures: 3

Health check 2

- COMMAND: ps -Aef | grep -q '[d]ockerd'

- Grace Period: 60

- Interval: 60

- Timeout: 20

- Max Consecutive Failures: 3

Health check 3

- COMMAND: docker ps -a

- Grace Period: 300

- Interval: 60

- Timeout: 20

- Max Consecutive Failures: 3
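Here are the three health checks expressed as entries for Marathon's `healthChecks` array, using its COMMAND protocol and the `gracePeriodSeconds`, `intervalSeconds`, `timeoutSeconds` and `maxConsecutiveFailures` fields. This is a sketch of the JSON the UI produces, not an export from a real cluster. The grep patterns use the bracket form so that grep does not match its own entry in the ps output.

```python
import json


def command_health_check(command: str, grace_period_s: int, interval_s: int = 60,
                         timeout_s: int = 20, max_failures: int = 3) -> dict:
    """One COMMAND health check in Marathon's app-definition format."""
    return {
        "protocol": "COMMAND",
        "command": {"value": command},
        "gracePeriodSeconds": grace_period_s,
        "intervalSeconds": interval_s,
        "timeoutSeconds": timeout_s,
        "maxConsecutiveFailures": max_failures,
    }


# Bracketed patterns keep grep from matching itself in the ps listing,
# which would otherwise make the first two checks pass unconditionally.
HEALTH_CHECKS = [
    command_health_check("ps -Aef | grep -q '[.]/runner'", grace_period_s=300),
    command_health_check("ps -Aef | grep -q '[d]ockerd'", grace_period_s=60),
    command_health_check("docker ps -a", grace_period_s=300),
]

if __name__ == "__main__":
    print(json.dumps({"healthChecks": HEALTH_CHECKS}, indent=2))
```

The shorter 60-second grace period on the dockerd check reflects that the Docker daemon should be up well before the runner is.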

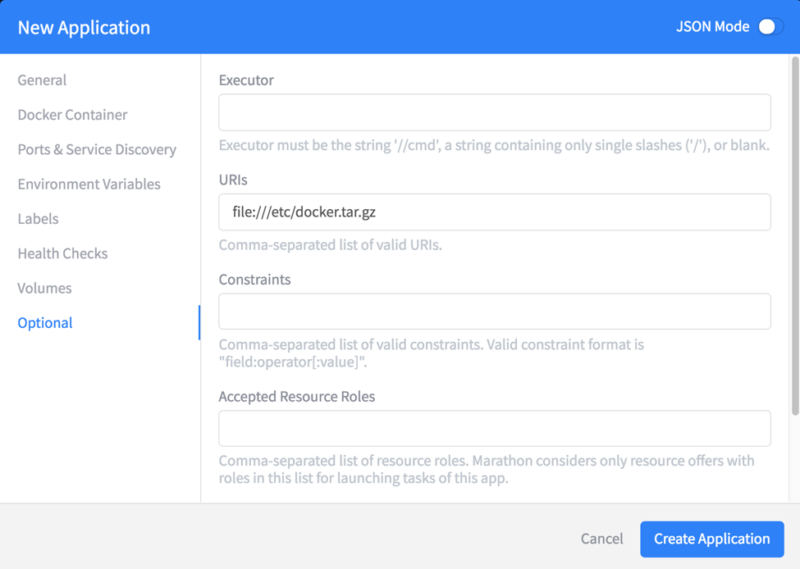

16. Select "Optional"

- On your DC/OS nodes, run docker login and sign in as the user who has access to the Iron.io IronWorker Docker image. Then tar and gzip the .docker folder, which contains config.json, so the archive can be made available on all of the nodes.

- Copy the resulting tar.gz file to each node, at a path DC/OS can read.

- In "URIs", enter the path to the file, for example: file:///etc/docker.tar.gz
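The archive step can be scripted with Python's standard `tarfile` module, as in the sketch below. It keeps the `.docker/` prefix inside the archive so that extracting it recreates the folder. The `/etc/docker.tar.gz` destination in the comment simply mirrors the example URI. The function name is hypothetical, and copying the file to every node is left to your own tooling.

```python
import tarfile
from pathlib import Path


def build_docker_auth_archive(home: Path, output: Path) -> Path:
    """Pack <home>/.docker/config.json into a tar.gz rooted at .docker/."""
    config = home / ".docker" / "config.json"
    if not config.is_file():
        raise FileNotFoundError(f"{config} not found; run docker login first")
    with tarfile.open(output, "w:gz") as tar:
        # arcname keeps the archive rooted at .docker/ regardless of home's path.
        tar.add(config, arcname=".docker/config.json")
    return output


if __name__ == "__main__":
    try:
        archive = build_docker_auth_archive(Path.home(), Path("docker.tar.gz"))
    except FileNotFoundError as err:
        print(err)
    else:
        # Copy to /etc/docker.tar.gz on each node, then list it in the app
        # definition as: "uris": ["file:///etc/docker.tar.gz"]
        print("wrote", archive)
```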

17. Select "Create Application"

18. Click "Scale" to launch instances of the application.
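Scaling can also be scripted against Marathon's REST API. Setting `instances` with `PUT /v2/apps/<app id>` has the same effect as the Scale button. The sketch below only builds the request and does not send it. The base URL is an assumption: on DC/OS, Marathon is usually reached through the Admin Router at `https://<your-dcos-url>/service/marathon`, and clusters with authentication enabled expect an `Authorization: token=...` header. The app ID `/ironworker` and the host name are hypothetical.

```python
import json
import urllib.request
from typing import Optional


def scale_request(marathon_url: str, app_id: str, instances: int,
                  token: Optional[str] = None) -> urllib.request.Request:
    """Build a PUT /v2/apps/<app_id> request that sets the instance count."""
    if instances < 0:
        raise ValueError("instances cannot be negative")
    url = f"{marathon_url.rstrip('/')}/v2/apps/{app_id.strip('/')}"
    body = json.dumps({"instances": instances}).encode("utf-8")
    req = urllib.request.Request(url, data=body, method="PUT",
                                 headers={"Content-Type": "application/json"})
    if token:
        # DC/OS-style auth header; only needed if your cluster requires it.
        req.add_header("Authorization", f"token={token}")
    return req


if __name__ == "__main__":
    req = scale_request("https://dcos.example.com/service/marathon",
                        "/ironworker", 3)
    print(req.get_method(), req.full_url)
    # To actually send it: urllib.request.urlopen(req)
```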

Summary

We are truly excited about this partnership and the potential it holds for turnkey hybrid microservices deployments. I'm looking forward to seeing how customers innovate around our combined platforms. Please share your comments and feedback below about your use cases, additional areas we should focus on, or anything else, really. And stay tuned for a lot more from this partnership in the near future.