Data scientists have a hard job to do. They have to find data, build and train statistical and machine learning models, integrate results into applications, generate reports... The list goes on and on. Providing the right set of tools running on top of the right infrastructure boosts productivity.

This is the first post in our Data Science on DC/OS tutorial series. It covers the planned highlights for the series. If we're missing something that you'd like us to cover, let us know at datascience-at-mesosphere.io.

Building Scalable Analytics with DC/OS

DC/OS is an incredibly flexible and scalable platform for building analytic systems. It has many great features for general cluster management. Best of all, it makes it simple to set things up using containers. Often data scientists use an ensemble of specific versions of different tools and languages. For example, specific versions of Python, Java, and R may be required to work together for a particular pipeline. A container is an easy way to package them all together without affecting the production versions of other pipelines or applications. Making tool deployment easier frees data scientists from drudgery so they can focus on the analyses that yield real business value.
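To make the container idea concrete, here is a minimal sketch, in Python, that builds a Marathon app definition pinning one pipeline's tool versions inside a specific Docker image. The app ID, image name, tag, command, and resource sizes are hypothetical placeholders; the field names follow Marathon's app JSON format, but check them against your DC/OS version before deploying (for example with `dcos marathon app add`).

```python
import json


def pinned_app(app_id: str, image: str, cmd: str, cpus: float = 1.0, mem: int = 2048) -> dict:
    """Build a Marathon app definition that runs `cmd` inside a pinned Docker image.

    Requiring an explicit image tag (never "latest") keeps this pipeline's
    Python/Java/R versions independent of every other app on the cluster.
    """
    last_part = image.rsplit("/", 1)[-1]  # ignore registry host:port, if any
    if image.endswith(":latest") or ":" not in last_part:
        raise ValueError("pin an explicit image tag, e.g. 'repo/name:1.2.3'")
    return {
        "id": app_id,
        "cmd": cmd,
        "cpus": cpus,
        "mem": mem,  # MiB
        "instances": 1,
        "container": {
            "type": "DOCKER",
            "docker": {"image": image},
        },
    }


if __name__ == "__main__":
    # Hypothetical image bundling the exact Python and R versions one pipeline needs.
    app = pinned_app(
        app_id="/datascience/churn-pipeline",
        image="example/churn-pipeline:py3.6-r3.4",
        cmd="python run_pipeline.py",
    )
    # Save this output to a file and deploy it, e.g. `dcos marathon app add app.json`.
    print(json.dumps(app, indent=2))
```

Because each pipeline gets its own image, upgrading R for one team never touches the versions another team depends on.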

Setup and installation of many applications is almost as easy as installing an app on your phone. DC/OS has a catalog of pre-configured applications, and many applications that are popular with data scientists (e.g., TensorFlow, Spark, Flink, BeakerX) are included. If what you want isn't in the catalog, it's likely available as an existing Docker image that you can easily add via the graphical interface.

In the coming months, we'll create content that walks people through many examples. We'll assume that the DC/OS cluster is up and running (see DC/OS Installation) and focus on DC/OS features from a data science perspective.

Why DC/OS?

There are several reasons why DC/OS is well-suited for data science, big data, and fast data projects:

- Many different tools, one platform. Modeling data is only one portion of a data pipeline. Accessing, exploring, cleaning/wrangling, modeling, and reporting are all required. The DC/OS service catalog makes it easy to deploy the necessary applications and data services. DC/OS is the glue that can connect all these functions.

- Scalability/Flexibility. Making many tools work together is great, but they also need to scale. The architecture of DC/OS makes adding nodes seamless for the data scientist.

- GPU isolation. Built-in GPU management means that data scientists can easily use the latest neural networks without having to allocate hardware resources manually.

- Simplified Operations. Data scientists have more valuable things to do with their time than manage complex infrastructure operations. In DC/OS, applications are self-contained, so the operations team does not need much additional information (or effort) from the data science team to take over management.
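As an illustration of GPU isolation and scaling together, here is a minimal sketch of a Marathon app definition that requests whole GPUs per instance. It assumes Marathon's `gpus` field, which is honored only by the Universal Container Runtime (container type `MESOS`); that runtime can still pull a Docker image. The app ID, image, command, and sizes are hypothetical.

```python
import json


def gpu_app(app_id: str, image: str, cmd: str, gpus: int = 1, instances: int = 1) -> dict:
    """Marathon app definition requesting `gpus` whole GPUs for each of `instances` tasks."""
    if gpus < 1 or instances < 1:
        raise ValueError("gpus and instances must be positive")
    return {
        "id": app_id,
        "cmd": cmd,
        "cpus": 2,
        "mem": 8192,  # MiB
        "gpus": gpus,  # assigned to this task only; no manual device juggling
        "instances": instances,  # scale out by raising this number
        "container": {
            # GPU scheduling assumes the Universal Container Runtime ("MESOS"),
            # which can still run a Docker image.
            "type": "MESOS",
            "docker": {"image": image},
        },
    }


if __name__ == "__main__":
    app = gpu_app("/datascience/train-cnn", "example/train-cnn:1.0", "python train.py", gpus=1, instances=2)
    print(json.dumps(app, indent=2))
```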

Future Content (Not Necessarily in Date Order)

Data Access

There can be no analytics of data without access to data. In most cases, DC/OS makes that easy. Many of the most common databases are available via the catalog, which means a few clicks in a graphical interface are all that is required to install PostgreSQL or MySQL. More scalable databases, e.g., CouchDB and Apache Cassandra, are also available via the graphical interface. The catalog also includes more use-case-specific databases, like Neo4j for graph data or Redis for an in-memory solution. HDFS is also supported. We won't cover them all, but we will cover real-world use cases for some of them.
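Once a database is running, querying it from Python looks the same as anywhere else. The sketch below uses the standard-library `sqlite3` module as a stand-in so it runs without a cluster; with a catalog-installed PostgreSQL you would instead open the connection with a DB-API 2.0 driver such as `psycopg2` (whose placeholder style is `%s` rather than `?`) pointed at your service's host name. The `orders` table and its columns are hypothetical.

```python
import sqlite3
from typing import List, Tuple


def daily_totals(conn, since: str) -> List[Tuple[str, float]]:
    """Sum hypothetical order amounts per day with a parameterized (injection-safe) query."""
    cur = conn.cursor()
    cur.execute(
        "SELECT day, SUM(amount) FROM orders WHERE day >= ? GROUP BY day ORDER BY day",
        (since,),
    )
    return cur.fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")  # stand-in for a catalog-installed database
    conn.execute("CREATE TABLE orders (day TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?)",
        [("2017-06-01", 10.0), ("2017-06-01", 5.5), ("2017-06-02", 7.25)],
    )
    print(daily_totals(conn, "2017-06-01"))  # [('2017-06-01', 15.5), ('2017-06-02', 7.25)]
```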

Data Exploration

Interactively working with data is often arduous. Setting everything up on the server, especially interactive plots and tables, can be difficult, so it usually requires bouncing data back and forth between the servers and a local laptop. Laptops are often under-powered, which further forces data scientists to work with representative subsets of the data to get a better understanding of the data as a whole.

Tools like BeakerX are easily installed via the graphical interface. BeakerX can create interactive plots and tables against HDFS, Spark, or many databases.
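When a laptop can't hold the full dataset, one standard way to draw a representative subset is reservoir sampling (Algorithm R), which picks a uniform random sample of fixed size in a single pass over data of unknown length. This is a generic technique sketch, not a BeakerX feature.

```python
import random
from typing import Iterable, List, Optional, TypeVar

T = TypeVar("T")


def reservoir_sample(items: Iterable[T], k: int, seed: Optional[int] = None) -> List[T]:
    """Return k items chosen uniformly at random from a stream (Algorithm R).

    Every item ends up in the sample with probability k / n, where n is the
    stream length, yet only k items are ever held in memory.
    """
    if k < 0:
        raise ValueError("k must be non-negative")
    rng = random.Random(seed)
    sample: List[T] = []
    for i, item in enumerate(items):
        if i < k:
            sample.append(item)  # fill the reservoir first
        else:
            j = rng.randint(0, i)  # inclusive on both ends
            if j < k:
                sample[j] = item  # keep item i with probability k / (i + 1)
    return sample


if __name__ == "__main__":
    # e.g. stream rows from a large file or a query cursor without loading it all
    rows = (f"row-{n}" for n in range(1_000_000))
    print(reservoir_sample(rows, k=5, seed=42))
```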

Data Cleansing/Wrangling

"Usually data is clean and ready to use from the start" - said no data scientist ever.

During the exploration process, the data's problems are exposed. There's usually no complete escape from the drudgery of cleaning data. Tools like OpenRefine can make data cleansing a little less of a grind. It has an easy-to-use graphical interface that simplifies complex behind-the-scenes tasks.

After the one-off work of discovering the data cleansing and transformation steps, those steps can be automated into a scalable framework using tools like p3-batchrefine. This gives the best of both worlds: ease of manual exploration and automated batch processing.
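The "discover once, replay in batch" pattern can be sketched in plain Python: record each cleaning step as a named operation on a column, then apply the same recipe to every new file. This only illustrates the pattern; OpenRefine and p3-batchrefine use their own JSON operation history, which this sketch does not reproduce, and the operation names and columns here are hypothetical.

```python
import csv
import io
from typing import Callable, Dict, List, Tuple

Row = Dict[str, str]

# Hypothetical library of cleaning operations a recipe can refer to by name.
OPERATIONS: Dict[str, Callable[[str], str]] = {
    "trim": str.strip,
    "lower": str.lower,
    "collapse_spaces": lambda s: " ".join(s.split()),
}


def apply_recipe(rows: List[Row], recipe: List[Tuple[str, str]]) -> List[Row]:
    """Apply recorded (column, operation) steps to every row, then drop exact duplicates."""
    cleaned: List[Row] = []
    seen = set()
    for row in rows:
        row = dict(row)  # copy so the caller's data is not mutated
        for column, op_name in recipe:
            row[column] = OPERATIONS[op_name](row[column])
        key = tuple(sorted(row.items()))
        if key not in seen:
            seen.add(key)
            cleaned.append(row)
    return cleaned


if __name__ == "__main__":
    raw = "name,city\n  Ada Lovelace ,London\nada   lovelace,London\n"
    # The recipe found during manual exploration, replayed on every batch.
    recipe = [("name", "trim"), ("name", "collapse_spaces"), ("name", "lower")]
    rows = list(csv.DictReader(io.StringIO(raw)))
    print(apply_recipe(rows, recipe))  # [{'name': 'ada lovelace', 'city': 'London'}]
```

Keeping the recipe as data rather than code is what lets the same steps run unchanged on a laptop sample and on a full batch job.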

Predictive Modeling, Machine Learning, and Deep Learning

Fun! There will be many posts about different tools and architectures for model training and delivery. The goal here is to demonstrate how different data science platforms can be constructed for different use cases. We'll compare batch scoring with real-time APIs. Specific tools will include:

- Apache MXNet is a lean, flexible, and ultra-scalable deep learning framework that supports state-of-the-art deep learning models, including convolutional neural networks (CNNs) and long short-term memory networks (LSTMs).

- PyTorch. Python is a Swiss Army knife for data scientists. It can be used to train and score models and to move or manipulate data. PyTorch is a deep learning framework that puts Python first, and DC/OS makes it easy to run.

- R. R is great for custom models and graphics but not always easy to scale. With DC/OS, however, there are many tricks to get R to run at scale. Models can be exported as PMML and then ingested into RESTful web services like Openscoring. Alternatively, models can be created in R with an H2O backend.

- H2O. H2O scales and distributes well on its own, and Python or R can also integrate with it. Models created with H2O can be exported as compilable Java objects. Once compiled, an object can be used in many ways, e.g., in a RESTful web service or as a UDF in a Hive query. Adding DC/OS makes it easy to access workflows and integrate H2O into a complete solution.
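To show why a PMML export makes an R model portable, and how one exported model can serve both batch and real-time scoring, here is a minimal sketch that evaluates a PMML `RegressionModel` using only the standard library. It assumes a single `RegressionTable` of numeric predictors and a trimmed-down document that omits elements real PMML requires (`Header`, `DataDictionary`, `MiningSchema`); files exported from R are richer, which is why a full evaluator such as Openscoring is used in practice. The coefficients and field names are hypothetical.

```python
import json
import xml.etree.ElementTree as ET
from typing import Dict, List, Tuple

NS = {"pmml": "http://www.dmg.org/PMML-4_3"}

# Hypothetical linear model: price = 50 + 2.5 * sqft_hundreds + 10 * rooms
PMML_DOC = """<PMML xmlns="http://www.dmg.org/PMML-4_3" version="4.3">
  <RegressionModel functionName="regression">
    <RegressionTable intercept="50">
      <NumericPredictor name="sqft_hundreds" coefficient="2.5"/>
      <NumericPredictor name="rooms" coefficient="10"/>
    </RegressionTable>
  </RegressionModel>
</PMML>"""

# (intercept, [(field name, coefficient, exponent), ...])
Model = Tuple[float, List[Tuple[str, float, int]]]


def load_linear_model(pmml_text: str) -> Model:
    """Read the intercept and numeric predictors from a single RegressionTable."""
    root = ET.fromstring(pmml_text)
    table = root.find("pmml:RegressionModel/pmml:RegressionTable", NS)
    if table is None:
        raise ValueError("no RegressionTable found")
    intercept = float(table.get("intercept", "0"))
    terms = [
        (p.get("name"), float(p.get("coefficient")), int(p.get("exponent", "1")))
        for p in table.findall("pmml:NumericPredictor", NS)
    ]
    return intercept, terms


def score(model: Model, record: Dict[str, float]) -> float:
    """intercept + sum(coefficient * value ** exponent) over the predictors."""
    intercept, terms = model
    return intercept + sum(c * record[name] ** e for name, c, e in terms)


def score_batch(model: Model, records: List[Dict[str, float]]) -> List[float]:
    """Batch mode: score many records at once, e.g. in a nightly job."""
    return [score(model, r) for r in records]


def handle_request(model: Model, body: str) -> str:
    """Real-time mode: the JSON-in/JSON-out body a REST endpoint could wrap."""
    return json.dumps({"prediction": score(model, json.loads(body))})


if __name__ == "__main__":
    model = load_linear_model(PMML_DOC)
    print(score_batch(model, [{"sqft_hundreds": 12, "rooms": 3}, {"sqft_hundreds": 20, "rooms": 4}]))
    print(handle_request(model, '{"sqft_hundreds": 12, "rooms": 3}'))
```

The model file is the only thing that crosses from R to the serving side, so the same artifact can back a nightly batch job and a low-latency endpoint.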

Reporting

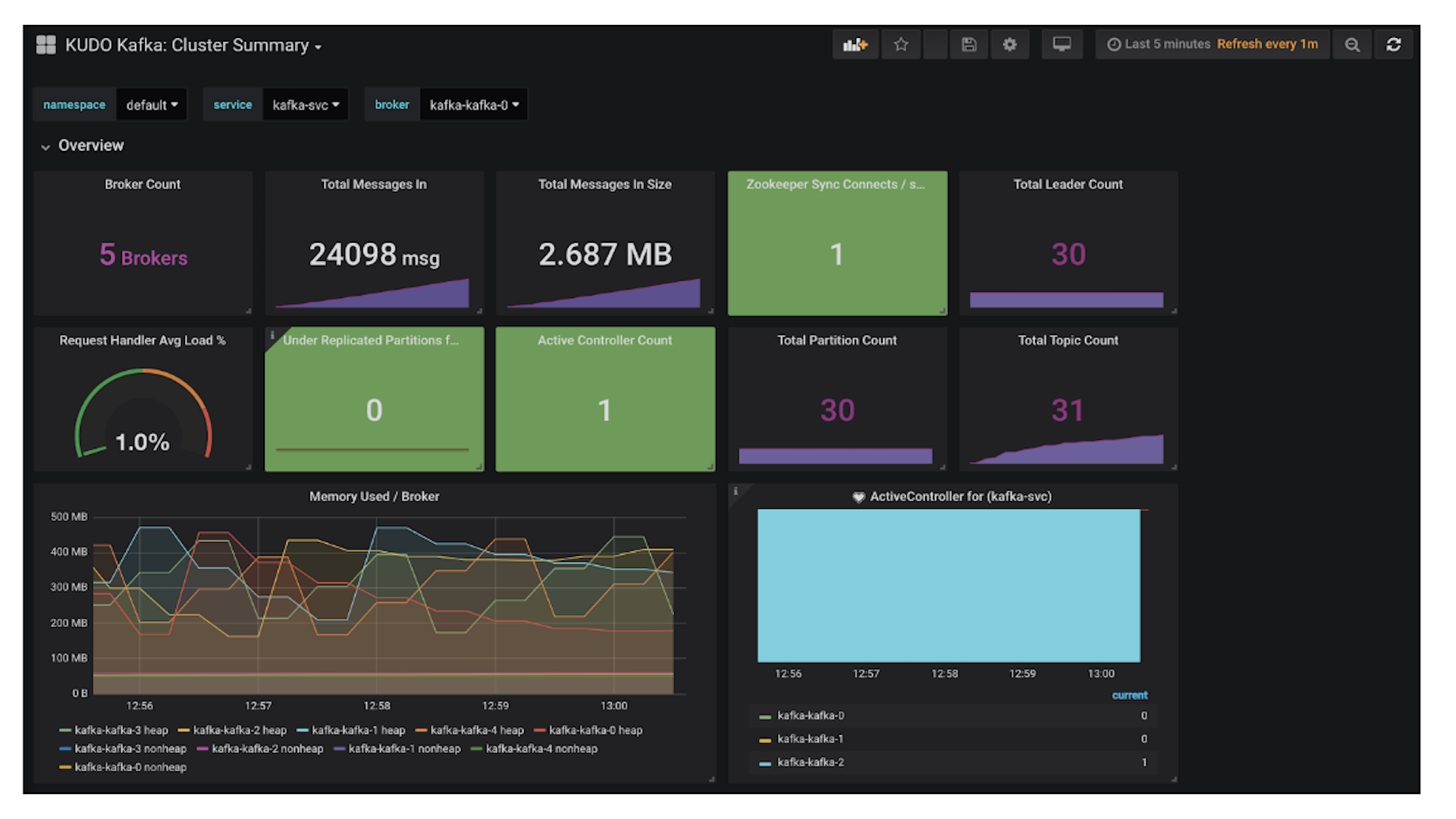

While not technically "data science", reporting often falls to the data science team. The best approach depends greatly on the volume of data and the use case, so there'll be more than one post on this. We'll walk through a flexible self-service reporting infrastructure that seamlessly combines several different applications, and we'll show how you can mix and match your own databases or graphing systems to make it work best for you.

- Data movement. First, there is getting data from point A to point B. We'll look at moving data through different systems. The process can be very different for different types of logging, so we'll look at various approaches for system logs, telemetry data, and business metrics. We'll start from different databases and merge all the data into a scalable system.

- Visualization. We'll look at multiple approaches to visualization. Many solutions, such as BeakerX and Zeppelin, are point-and-click inside of DC/OS. Some solutions give you a single place for all your reports, while others let you embed reports across your own internal website. DC/OS provides a complete infrastructure so you can securely provide interactive reports to both internal and external users.
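Merging data from different systems usually starts with aligning the sources on a shared key such as time. Here is a minimal sketch, assuming two hypothetical feeds (system telemetry and business metrics) of `(timestamp, metric, value)` events with ISO-8601 timestamps in one shared time zone, that buckets both by minute and joins them into one row per bucket, the kind of shape a reporting database or dashboard would typically ingest. It needs Python 3.7+ for `datetime.fromisoformat`.

```python
from collections import defaultdict
from datetime import datetime
from typing import Dict, Iterable, List, Tuple

Event = Tuple[str, str, float]  # (ISO-8601 timestamp, metric name, value)


def minute_bucket(ts: str) -> str:
    """Truncate an ISO-8601 timestamp to the start of its minute."""
    return datetime.fromisoformat(ts).replace(second=0, microsecond=0).isoformat()


def merge_sources(*sources: Iterable[Event]) -> List[Dict[str, object]]:
    """Sum each metric per minute across all sources; rows come back sorted by minute.

    Assumes every timestamp uses the same time zone, so the ISO strings sort chronologically.
    """
    buckets: Dict[str, Dict[str, float]] = defaultdict(dict)
    for source in sources:
        for ts, metric, value in source:
            row = buckets[minute_bucket(ts)]
            row[metric] = row.get(metric, 0.0) + value
    return [{"minute": minute, **metrics} for minute, metrics in sorted(buckets.items())]


if __name__ == "__main__":
    telemetry = [
        ("2017-06-01T12:00:05", "http_errors", 1),
        ("2017-06-01T12:00:35", "http_errors", 2),
        ("2017-06-01T12:01:10", "http_errors", 1),
    ]
    business = [
        ("2017-06-01T12:00:50", "orders", 3),
        ("2017-06-01T12:01:59", "orders", 5),
    ]
    for row in merge_sources(telemetry, business):
        print(row)
```

Summing suits count-like metrics such as errors or orders; gauges like CPU percentage would need an average or last-value rule instead.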
