Just because Kubernetes is tested to 5,000 nodes doesn’t mean your cluster, with all its monitoring/logging/etc. addons, or workloads are. Just because you built an efficient network and infrastructure doesn’t mean an overlay isn’t going to have abusive overhead. Currently we test on Kubernetes clusters as small as 1 node and as large as 600+ nodes. Furthermore, D2iQ’s Ksphere platform of products should have no issues scaling to 1,000 nodes.

Just because Kubernetes is tested to 5,000 nodes doesn’t mean your cluster, with all its monitoring/logging/etc. addons, or workloads are. Just because you built an efficient network and infrastructure doesn’t mean an overlay isn’t going to have abusive overhead. Currently we test on Kubernetes clusters as small as 1 node and as large as 600+ nodes. Furthermore, D2iQ’s Ksphere platform of products should have no issues scaling to 1,000 nodes.

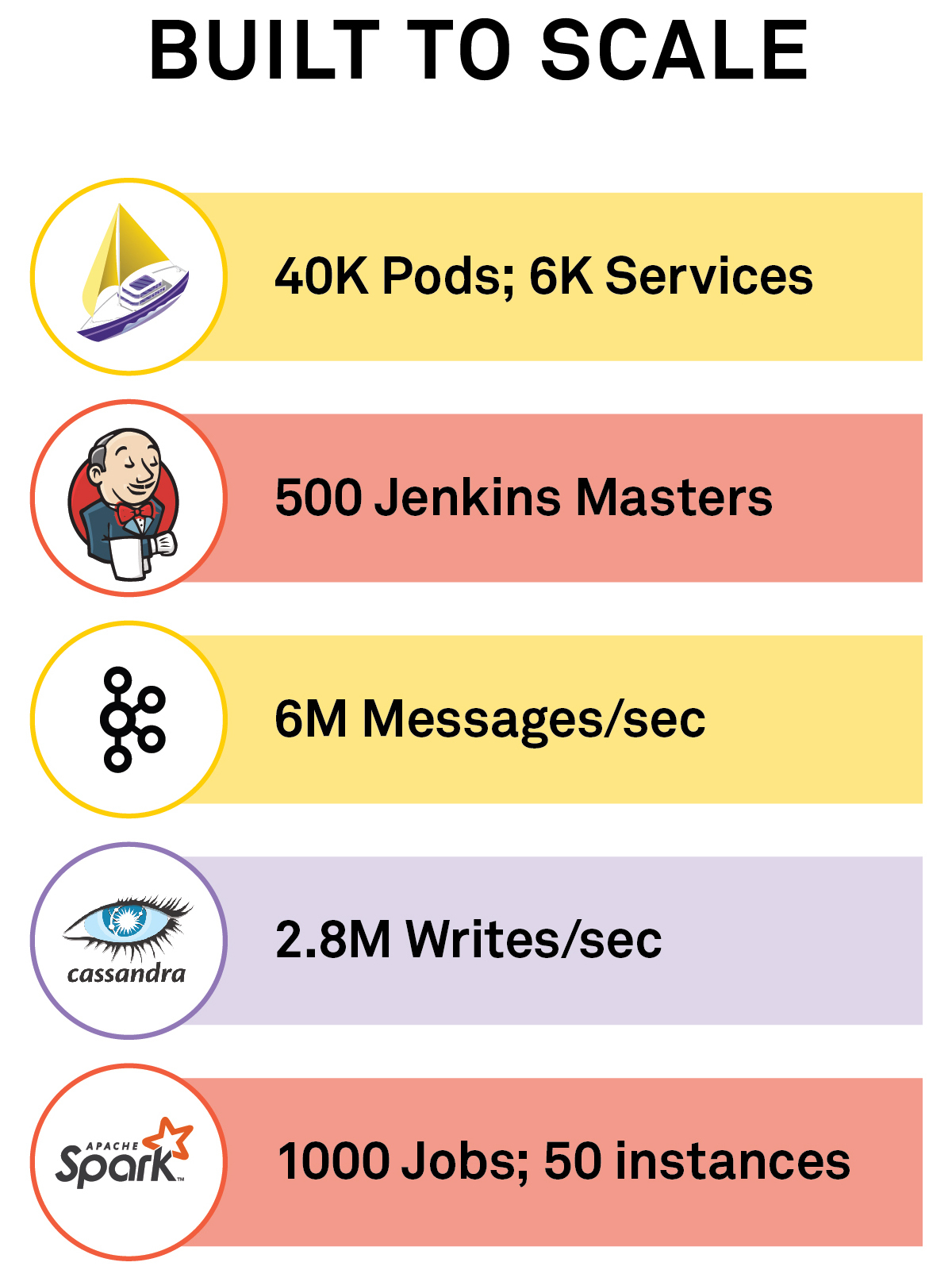

Here is what we are seeing in our own large-scale testing:

If a vendor can’t show you the receipts of the testing for your own guidance, then I’d treat them as suspect. Building new features, unit testing and the like is the easy stuff. Until you try to emulate the environments these features will be used in, including the scale they will be used at or the efficiency they will need to achieve, you just can’t know how everything will work in practice.

Cloud native platforms and workloads have a lot of moving parts with tons of comorbidities. Years ago we stopped assuming that just because something worked in some local test that it would work in customer settings. So we started working with our customers to define requirements to simulate their own environments—the scale, networking, and operations needed for a given workload. D2iQ tests to these specifications for both large and small environments, because our customers run at a lot of different scales.

This use case testing has helped us fix many issues before the products get to customers. Today I want to show you the receipts.

- For large scale testing, we use a cluster with over 600 nodes. We built features so Konvoy should be able to scale to 1,000 nodes, but along the way we found CoreDNS and networking improvements.

- Networking and Storage Efficiency - It is important to test for both small and large clusters. Our customers are very interested in storage and networking overhead. For networking we run a full array of networking benchmarking tools every month to minimize overhead.

- Connecting 100s of small and edge clusters - We provision, upgrade, and go through the entire lifecycle of many real clusters in Kommander. These clusters are scattered across different clouds, regions and availability zones.

- Dispatch and GitOps - Building and testing software is part of any company's core business. With multiple DevOps teams building many microservices, it's critical to schedule and process those build pipelines as rapidly as possible. Processing 100s of pipelines per minute, Dispatch leverages the scalability of the underlying Kubernetes cluster.

- Jenkins for large banks - Our customers have run as many as 500 Jenkins masters and 2,500 executors. We have tested to that level. It took a few tests and iterative improvements to get to that scale.

- Cassandra 2.8x of Netflix - In the last set of tests, we ran Cassandra across 4 data centers (yes, one Cassandra cluster across 4 data centers). It scaled to 2.8 million writes per second at its peak. For comparison, Netflix’s Cassandra database is around 1 million writes per second.

- Kafka trending toward Uber - Uber famously was running 1.1 trillion Kafka messages per day. We test at 6 million messages per second and while we don’t run the test for 24 hours, we would have had 400 billion messages in a day. There are no issues we are seeing at this scale and can likely scale up to 1.1 trillion with more resources.

- Spark and Fast Machine Learning - Spark is intrical part of a machine learning pipeline. Our Spark operator now hits 1,000 concurrent jobs with 50 instances running on Kubernetes. There are no current issues preventing us from testing at a larger scale.

Future: Testing Smaller Cluster with Large Nodes and Megatons of Pods

Some customers actually want to run Kubernetes with fewer nodes with very large core count. This large core strategy is not unusual among on premises customers and helps reduce cost compared to public cloud providers. Here customers would like to test over 200 pods per nodes, which is above the community testing for Kubernetes.

The cost advantages of switching to bare metal strategy, while relying on lightweight virtual machines in the cluster, Istio microsegmentation and Kubernetes network policies is clear and substantial.

How much does this testing cost?

A lot! We can buy a BMW every month for what it costs to test the platform and workloads. But we feel this is a necessary investment in our customers’ Day 2 success.