Since the release of 1.11, DC/OS has supported what they refer to as "Kubernetes-as-a-Service" (Well done, marketing!). It is being pitched as a way to run managed Kubernetes clusters that provide additional out-of-the-box features such as HA, security, and ease of deployments and upgrades. The idea is to ease the pain of running and managing Kubernetes clusters, while also taking advantage of running Kubernetes alongside other frameworks on DC/OS like Kafka, Elasticsearch, etc. This is very attractive to me because it adds yet another service that I can provide users while keeping everything inside of a single DC/OS cluster: more offerings, with ALL microservices in harmony under the same hood. Very cool!

I have finally gotten a few minutes here and there over the last couple of days to begin playing around with this new offering and read up on the docs. In this post, I would like to share my first few hours getting started with Kubernetes on DC/OS. I will discuss my experiences, explain some of the components, provide tips, and hopefully show how you can get started using it as well.

Deploying Kubernetes on DC/OS

First things first. We need to get Kubernetes up and running on our DC/OS cluster. Deploying packages and services to DC/OS is pretty trivial. You have the ability to install packages from either the DC/OS Catalog or from the CLI. Kubernetes is no different and supports both options. For this post we will be using the CLI, since we will need the Kubernetes CLI package installed so that we can easily configure "kubectl" later.

The first deployment I did was the quick start example (accepting ALL defaults) just to see what would happen when I deployed it and to get a feel for what to expect. Would it actually work as expected?

dcos package install kubernetes

It worked! It stood up all the necessary components (see the prereqs section) in the cluster in less than 10 mins. But the quick start is really no better than running minikube locally. It did prove that it would deploy with no issues, though. I really set out to see a true managed HA Kubernetes cluster that I could potentially take to production someday.

I removed the current deployment and took to the docs for Advanced Installation, and about 5 mins later I came up with the following JSON to fit my current needs for a nice "production" style testing ground (see options.json in the git repo above):

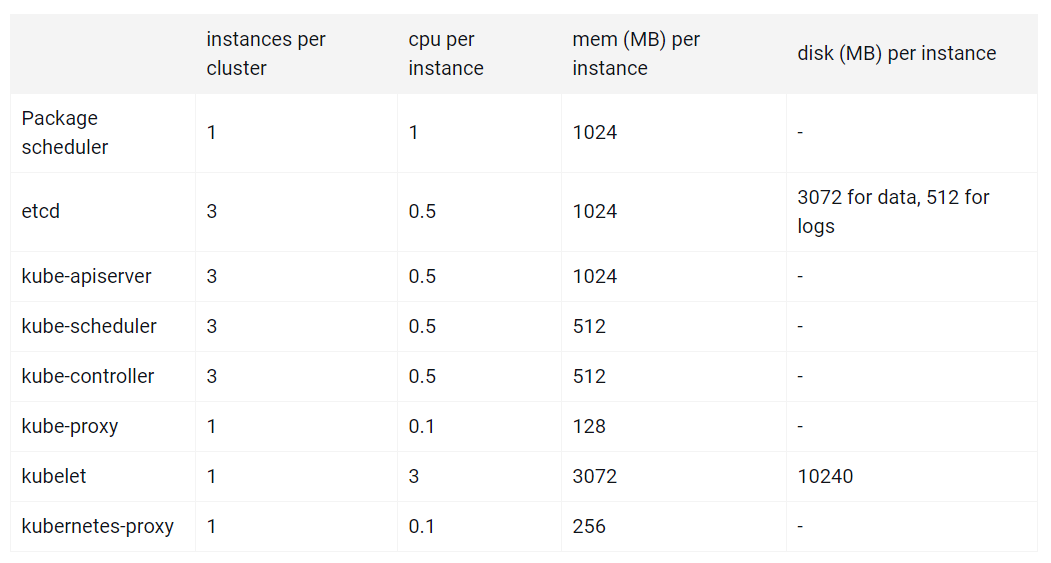

    {
      "service": {
        "service_account": "kubernetes",
        "service_account_secret": "kubernetes/sa"
      },
      "kubernetes": {
        "high_availability": true,
        "node_count": 4,
        "reserved_resources": {
          "kube_cpus": 20,
          "kube_mem": 20000,
          "kube_disk": 10500
        }
      }
    }

To note, I am using the Enterprise Edition of DC/OS, so I am utilizing the service account option. You can see the Advanced Install Docs and the git repo above for more information on the Kubernetes service account.

From the JSON above, you can see that Mesosphere has provided us with a "high_availability" key that will deploy the Kubernetes components in HA. You then specify the "node_count", which is the number of K8s worker nodes, and under "reserved_resources" how much CPU, memory, and disk you would like to reserve on the DC/OS cluster for each worker node to run Kubernetes services. When deployment is complete we should end up with an HA Kubernetes cluster with 4 worker nodes, each given 20 CPUs, 20 GB of memory, and about 10.5 GB of disk (kube_mem and kube_disk are in MB). NOTE: We aren't doing anything for ingress on this yet since it is not part of the default installation.
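To put rough numbers on what that JSON asks of the DC/OS cluster, here is a minimal sketch that multiplies the per-node reservations by the node count. The function name `worker_totals` is made up for illustration, and it only covers the worker nodes: the control plane components that an HA deployment adds are not counted. It also assumes kube_mem and kube_disk are expressed in MB.

```shell
# Sketch: total resources reserved across all Kubernetes worker nodes.
# Arguments mirror the options.json keys: node_count, kube_cpus,
# kube_mem, kube_disk. Assumes kube_mem and kube_disk are given in MB.
# Control plane tasks are NOT included in these totals.
worker_totals() {
    nodes=$1 cpus=$2 mem_mb=$3 disk_mb=$4
    printf 'cpus=%d mem_mb=%d disk_mb=%d\n' \
        "$((nodes * cpus))" "$((nodes * mem_mb))" "$((nodes * disk_mb))"
}

# Values taken from the options.json in this post
worker_totals 4 20 20000 10500
```

Running the numbers before you deploy is a cheap way to check that the agents actually have that much headroom.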

dcos package install kubernetes --options=options.json

Huzzah! Everything worked without issue and only took about 12 mins to completely install all components needed for HA!

I will have to say that the deployment of Kubernetes on DC/OS is one of the more impressive things that I have seen performed on the platform. It literally could not have been more simple or seamless. I am not the most experienced Kubernetes user, but I have played around with it enough and understand its many components and complexities. Mesosphere did a great job on this.

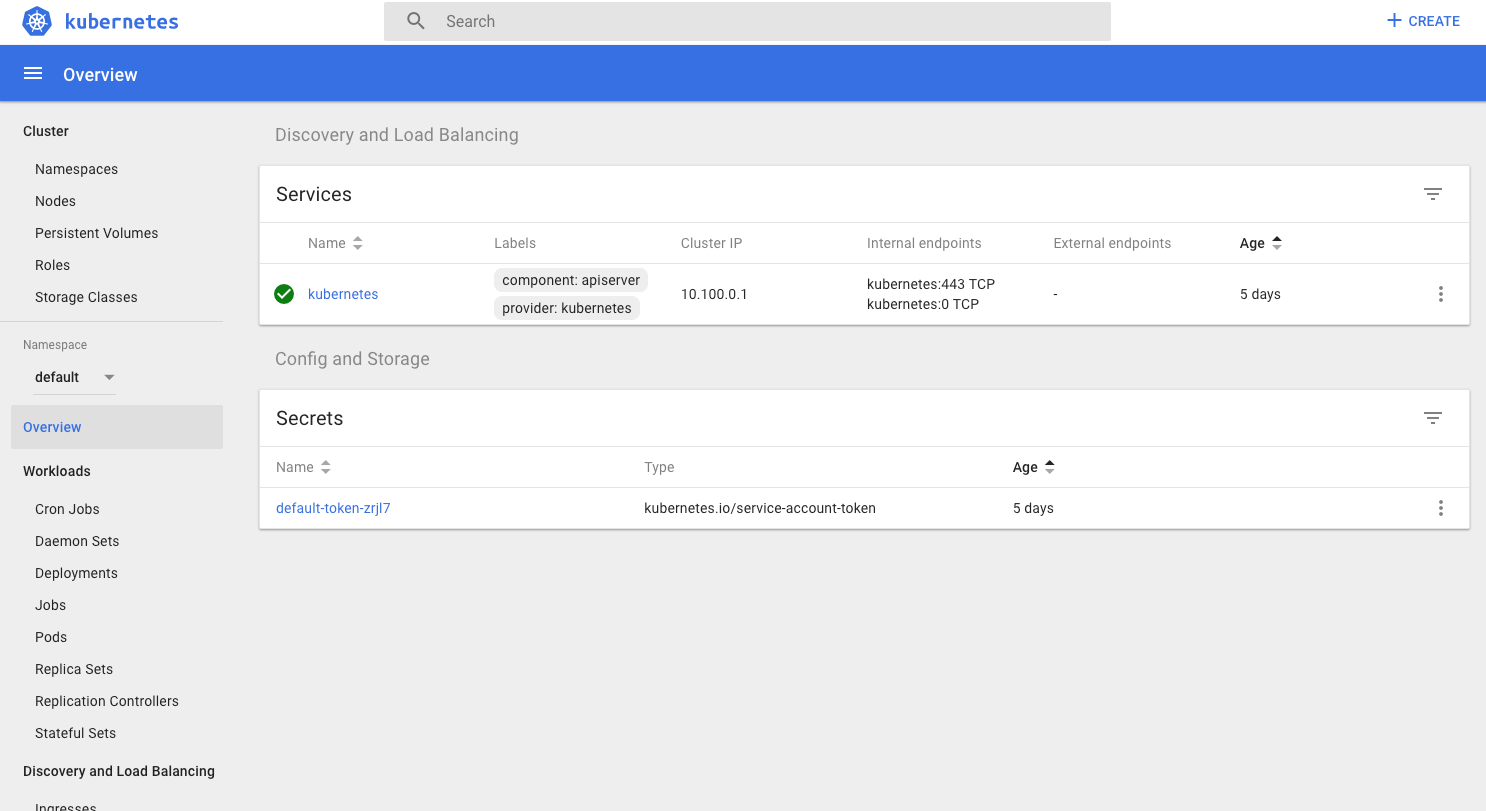

Kubernetes Dashboard

One nice feature that is provided with the Kubernetes deployment out of the box is the Kubernetes dashboard. I'm not going to spend a whole lot of time on this, but it is definitely something that should be mentioned since it is a must in any Kubernetes environment. Once you have your K8s cluster up and running, you can access the dashboard by going to the following URL in your browser: http://${DCOS_URL}/service/kubernetes-proxy/.

Access is managed through the DC/OS UI and Admin Router service and looks and acts just like you would expect.

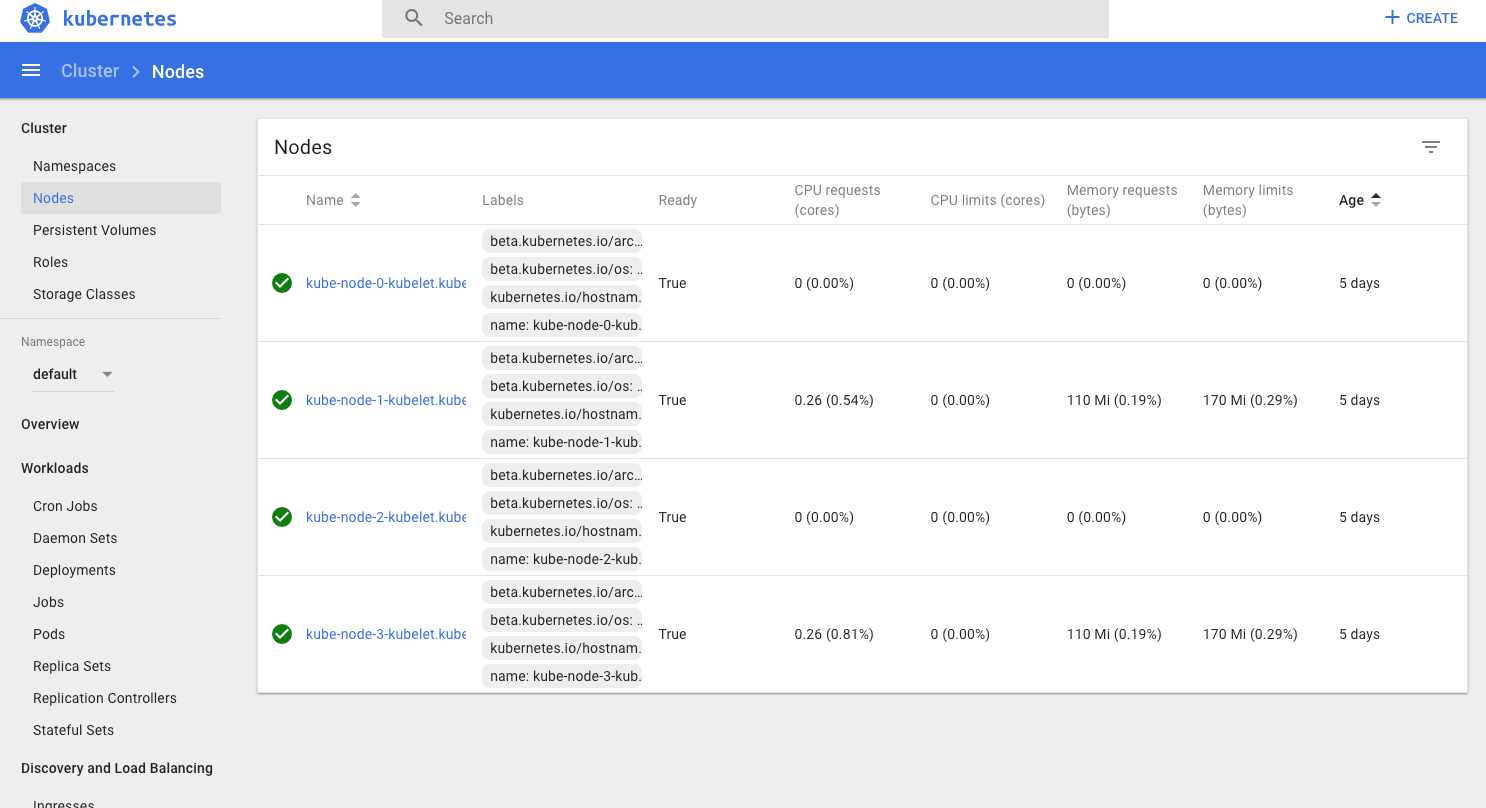

And you can see that I have 4 worker nodes below.

This is nothing special, but I thought I should mention that it comes as part of the installation and how to access it. Most of the time this is yet another component that you have to set up on your own when you stand up your own K8s cluster. Very happy so far.

Kubectl

The "Kube-Cuddle" tool (I pronounce it "kube-C-T-L"). If you have ever played with a K8s cluster or have ever set up minikube, chances are you already have this command line tool installed. I really love using this tool and already had it installed on my machine, but you definitely need to have it installed to follow along and/or do anything beyond what we have already talked about. You can obtain the installation from here based on your OS needs. You will also need to have the DC/OS CLI installed to make configuring your kubectl environment very simple.

Once you get kubectl installed and you have the DC/OS CLI installed, you are ready to configure kubectl to access your cluster.

Install the DC/OS Kubernetes CLI:

    dcos package install kubernetes --cli

Execute the following to allow the DC/OS CLI to automatically set up access to your cluster:

    dcos kubernetes kubeconfig
    kubeconfig context 'dcos-ops' created successfully

Validate by running a few commands:

    kubectl get nodes
    NAME                                   STATUS    ROLES     AGE       VERSION
    kube-node-0-kubelet.kubernetes.mesos   Ready     <none>    5d        v1.9.7
    kube-node-1-kubelet.kubernetes.mesos   Ready     <none>    5d        v1.9.7
    kube-node-2-kubelet.kubernetes.mesos   Ready     <none>    5d        v1.9.7
    kube-node-3-kubelet.kubernetes.mesos   Ready     <none>    5d        v1.9.7

    kubectl cluster-info
    Kubernetes master is running at https://${DCOS_URL}/service/kubernetes-proxy
    KubeDNS is running at https://${DCOS_URL}/service/kubernetes-proxy/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

    To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

Really simple and I didn't have to do much. Note that you can set up multiple environments and contexts. You will likely, at some point, have to re-run the kubeconfig command to regain access, since your DC/OS authentication will eventually lapse and you will need to log back in.
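Since losing access is something you will run into sooner or later, a small wrapper can take the sting out of it. The following is only a sketch: `refresh_access` and `kubectl_retry` are names I made up, and the retry is deliberately crude in that it fires on any kubectl failure, not just on authentication errors. Keep in mind that `dcos auth login` may prompt you interactively.

```shell
# Hypothetical helper: log back in to DC/OS and regenerate the
# kubectl context. 'dcos auth login' may prompt interactively.
refresh_access() {
    dcos auth login && dcos kubernetes kubeconfig
}

# Run a kubectl command; if it fails, refresh access once and retry.
# Crude by design: it retries on ANY failure, not only auth errors.
kubectl_retry() {
    if kubectl "$@"; then
        return 0
    fi
    refresh_access || return 1
    kubectl "$@"
}
```

With this in your shell profile, `kubectl_retry get nodes` behaves like the plain command until the session lapses, at which point it walks you through logging in again.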

Now that we have access to interact with our Kubernetes cluster, let's start slinging some deployments in there and start playing around. This should act just like any K8s cluster.

Launch a simple deployment:

    kubectl run nginx --image=nginx --port 8080
    deployment.apps "nginx" created

    kubectl get pods
    NAME                    READY     STATUS    RESTARTS   AGE
    nginx-b87c99f86-9lfnn   1/1       Running   0          14s

Let's delete the current pod and ensure that it comes back:

    kubectl delete pods nginx-b87c99f86-9lfnn
    pod "nginx-b87c99f86-9lfnn" deleted

    kubectl get pods
    NAME                    READY     STATUS              RESTARTS   AGE
    nginx-b87c99f86-9lfnn   0/1       Terminating         0          1m
    nginx-b87c99f86-zpjg6   0/1       ContainerCreating   0          2s

    kubectl get pods
    NAME                    READY     STATUS        RESTARTS   AGE
    nginx-b87c99f86-9lfnn   0/1       Terminating   0          1m
    nginx-b87c99f86-zpjg6   1/1       Running       0          10s

    kubectl get pods
    NAME                    READY     STATUS    RESTARTS   AGE
    nginx-b87c99f86-zpjg6   1/1       Running   0          14s

Cool! Nothing special, but it is doing things as expected.

How about scaling?

    kubectl get deployment
    NAME      DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
    nginx     1         1         1            1           3m

    kubectl scale --replicas=20 deployment/nginx
    deployment.extensions "nginx" scaled

    kubectl get deployment
    NAME      DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
    nginx     20        20        20           1           4m

    kubectl get pods
    NAME                    READY     STATUS    RESTARTS   AGE
    nginx-b87c99f86-2rqbp   1/1       Running   0          9s
    nginx-b87c99f86-5zqmx   1/1       Running   0          8s
    nginx-b87c99f86-6ncqg   1/1       Running   0          9s
    nginx-b87c99f86-8dhfb   1/1       Running   0          8s
    nginx-b87c99f86-9596z   1/1       Running   0          8s
    nginx-b87c99f86-bs96v   1/1       Running   0          9s
    nginx-b87c99f86-cqlmf   1/1       Running   0          8s
    nginx-b87c99f86-crdnx   1/1       Running   0          8s
    nginx-b87c99f86-fc6ph   1/1       Running   0          8s
    nginx-b87c99f86-fzszg   1/1       Running   0          8s
    nginx-b87c99f86-kbqmz   1/1       Running   0          9s
    nginx-b87c99f86-lk669   1/1       Running   0          8s
    nginx-b87c99f86-m4kgz   1/1       Running   0          9s
    nginx-b87c99f86-mfgns   1/1       Running   0          8s
    nginx-b87c99f86-nph4v   1/1       Running   0          9s
    nginx-b87c99f86-pthd5   1/1       Running   0          8s
    nginx-b87c99f86-qfr86   1/1       Running   0          9s
    nginx-b87c99f86-rkpzr   1/1       Running   0          8s
    nginx-b87c99f86-z9n6g   1/1       Running   0          8s
    nginx-b87c99f86-zpjg6   1/1       Running   0          3m

    kubectl delete deployment nginx
    deployment.extensions "nginx" deleted

    kubectl get pods
    NAME                    READY     STATUS        RESTARTS   AGE
    nginx-b87c99f86-5zqmx   0/1       Terminating   0          4m
    nginx-b87c99f86-9596z   0/1       Terminating   0          4m
    nginx-b87c99f86-bs96v   0/1       Terminating   0          4m
    nginx-b87c99f86-kbqmz   0/1       Terminating   0          4m
    nginx-b87c99f86-lk669   0/1       Terminating   0          4m
    nginx-b87c99f86-pthd5   0/1       Terminating   0          4m
    nginx-b87c99f86-qfr86   0/1       Terminating   0          4m
    nginx-b87c99f86-z9n6g   0/1       Terminating   0          4m
    nginx-b87c99f86-zpjg6   0/1       Terminating   0          7m

Again, absolutely nothing special going on here, but you can see that it is doing things. It is working as it should without really any sweat, blood, or tears at this point. I really like how easy it is to set up interaction with the K8s cluster by just issuing a couple of commands. Very simple so far.
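Eyeballing twenty rows of pod status gets old quickly. As a small convenience, here is a sketch of a filter that counts pods in a given state from the default `kubectl get pods` table. The function name `count_status` is my own, and it assumes the default column layout shown in this post, where STATUS is the third column and the first row is a header.

```shell
# Count rows of 'kubectl get pods' output (read from stdin) whose
# STATUS column matches the first argument. Assumes the default table
# layout: a header row, then NAME READY STATUS RESTARTS AGE.
count_status() {
    awk -v want="$1" 'NR > 1 && $3 == want { n++ } END { print n + 0 }'
}

# Usage against a live cluster:
#   kubectl get pods | count_status Running
```

If all you want is to block until a scale-up has finished, `kubectl rollout status deployment/nginx` does that without any parsing.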

One thing that I think would be really nice to see here, especially as an Ops or Admin of the DC/OS Cluster, is a way to see the Kubernetes services running from within the DC/OS UI. I could potentially see it getting confusing trying to track down different services between the different orchestrators (Marathon and Kubernetes). Not a huge loss, of course, but trying to keep ALL the things under one hood would be really nice.

Communications to ALL the things on DC/OS

To me, this is the most interesting feature of running Kubernetes on DC/OS: being able to provide my users with more options for managing their microservices. All microservices and big data services live in harmony in a shared cluster and can interact with and utilize one another easily. For example, services running on Kubernetes can utilize a Kafka cluster running on DC/OS, and vice versa. By easily, I mean with little customization and effort on the user's end. DC/OS already makes this possible with the way that it handles service discovery, DNS, and networking with features such as VIPs and Overlay.

Let's take a look at how to interact with services running in Kubernetes and other services running in DC/OS. Let's create another deployment with an exposed port as a fall back since we know we can use NodePort for a persistent URL:

    kubectl run nginx --image=nginx --port=80
    deployment.apps "nginx" created

    kubectl expose deployment nginx --port=80 --target-port=80 --type=NodePort
    service "nginx" exposed

    kubectl describe services nginx
    Name:                     nginx
    Namespace:                default
    Labels:                   run=nginx
    Annotations:              <none>
    Selector:                 run=nginx
    Type:                     NodePort
    IP:                       10.100.124.239
    Port:                     <unset>  80/TCP
    TargetPort:               80/TCP
    NodePort:                 <unset>  30291/TCP
    Endpoints:                9.0.1.79:80
    Session Affinity:         None
    External Traffic Policy:  Cluster
    Events:                   <none>
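Since NodePort is the fallback here, it helps to be able to pull the assigned port out of that output without reading it by hand. The following is a minimal sketch; `node_port_from_describe` is a name I made up, and it simply looks for the "NodePort:" row in the describe output. A service with several ports would print one line per port.

```shell
# Print the node port(s) found in 'kubectl describe service' output
# read from stdin. Matches only the "NodePort:" row, not "Type:".
node_port_from_describe() {
    awk '$1 == "NodePort:" { split($NF, parts, "/"); print parts[1] }'
}

# Usage against a live cluster:
#   kubectl describe services nginx | node_port_from_describe
```

If you would rather not parse text at all, `kubectl get service nginx -o jsonpath='{.spec.ports[0].nodePort}'` asks the API for the same value directly.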

So a couple things that I am interested in finding out:

- How can other services running in DC/OS interact with services in Kubernetes? Other DC/OS Services → Kubernetes Services

- How do Kubernetes services communicate with other Services running on the DC/OS Cluster? Kubernetes Services → DC/OS Services

Out of the box, DC/OS provides all of its services with a DNS-based service discovery mechanism. This allows for easy service communication and load balancing. I won't go into a ton of detail (more info here) about how this works or explain the different options provided, but what is important to know here is that you can resolve the services running on DC/OS under the following naming convention:

<service-name>.<group-name>.<framework-name>.mesos

There are better ways of doing this, like VIPs, but those must be explicitly specified since they are not a default. But does this work for Kubernetes services running on DC/OS as well?

Unfortunately, it does not, unless I am missing something (definitely possible). I tried every naming convention I could think of and could not resolve any of them. I even hit the MesosDNS API in search of anything named "nginx" in my cluster and nothing came back. This tells me that either I am missing something, or the services running in Kubernetes are not being registered in MesosDNS and/or Kubernetes is using its own DNS.
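If you want to run the same experiment yourself, the naming convention quoted earlier is easy to capture in a helper so you can generate candidate names to test. This is only a sketch: `mesos_dns_name` is a hypothetical function, and treating the group as optional is my own assumption for services that are not in a group.

```shell
# Build a Mesos-DNS style name following the convention in this post:
#   <service-name>.<group-name>.<framework-name>.mesos
# An empty group is allowed here (an assumption) and yields
#   <service-name>.<framework-name>.mesos
mesos_dns_name() {
    service=$1 group=$2 framework=$3
    if [ -n "$group" ]; then
        printf '%s.%s.%s.mesos\n' "$service" "$group" "$framework"
    else
        printf '%s.%s.mesos\n' "$service" "$framework"
    fi
}

# Example probe from a host inside the cluster:
#   nslookup "$(mesos_dns_name nginx "" marathon)"
```

For services launched through Marathon this should give you something resolvable; for the nginx service running inside Kubernetes, none of the names I tried came back.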

But how about the endpoint (9.0.1.79:80) and the service's cluster IP (10.100.124.239) from the "describe" output above? Can our other services on DC/OS interact and communicate with those?

The Answer is Yes.

    curl 9.0.1.79:80
    <!DOCTYPE html>
    <html>
    <head>
    <title>Welcome to nginx!</title>
    <style>
        body {
            width: 35em;
            margin: 0 auto;
            font-family: Tahoma, Verdana, Arial, sans-serif;
        }
    </style>
    </head>
    <body>
    <h1>Welcome to nginx!</h1>
    <p>If you see this page, the nginx web server is successfully installed and
    working. Further configuration is required.</p>

    <p>For online documentation and support please refer to
    <a href="http://nginx.org/">nginx.org</a>.<br/>
    Commercial support is available at
    <a href="http://nginx.com/">nginx.com</a>.</p>

    <p><em>Thank you for using nginx.</em></p>
    </body>
    </html>

    curl 10.100.124.239:80
    <!DOCTYPE html>
    <html>
    <head>
    <title>Welcome to nginx!</title>
    <style>
        body {
            width: 35em;
            margin: 0 auto;
            font-family: Tahoma, Verdana, Arial, sans-serif;
        }
    </style>
    </head>
    <body>
    <h1>Welcome to nginx!</h1>
    <p>If you see this page, the nginx web server is successfully installed and
    working. Further configuration is required.</p>

    <p>For online documentation and support please refer to
    <a href="http://nginx.org/">nginx.org</a>.<br/>
    Commercial support is available at
    <a href="http://nginx.com/">nginx.com</a>.</p>

    <p><em>Thank you for using nginx.</em></p>
    </body>
    </html>

The above outputs are from attaching to a container running on DC/OS under Marathon and executing curl from within it. A couple of things to note here: the endpoint address is actually coming from the DC/OS Overlay network that we create when provisioning our cluster, and it is exposed at the port that we specified. The IP is the cluster IP that K8s assigns to the service from its own network, since services and containers get IP addresses by default on a K8s cluster. We can use these for communication, but they are likely to change at some point.
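Because those addresses can change, anything that depends on them should look them up at the time of use instead of hard-coding them. Here is a minimal sketch of that lookup; `current_endpoint_ips` is a name I made up, and it has to run somewhere kubectl is already configured for the cluster, which is not the case inside an arbitrary Marathon container.

```shell
# Print the pod IPs currently backing a Kubernetes service, separated
# by spaces. $1 is the service name. Requires a configured kubectl.
current_endpoint_ips() {
    kubectl get endpoints "$1" -o jsonpath='{.subsets[*].addresses[*].ip}'
}

# Usage against a live cluster:
#   for ip in $(current_endpoint_ips nginx); do curl "$ip:80"; done
```

This does not solve the persistence problem, but it at least avoids baking a stale IP into a script.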

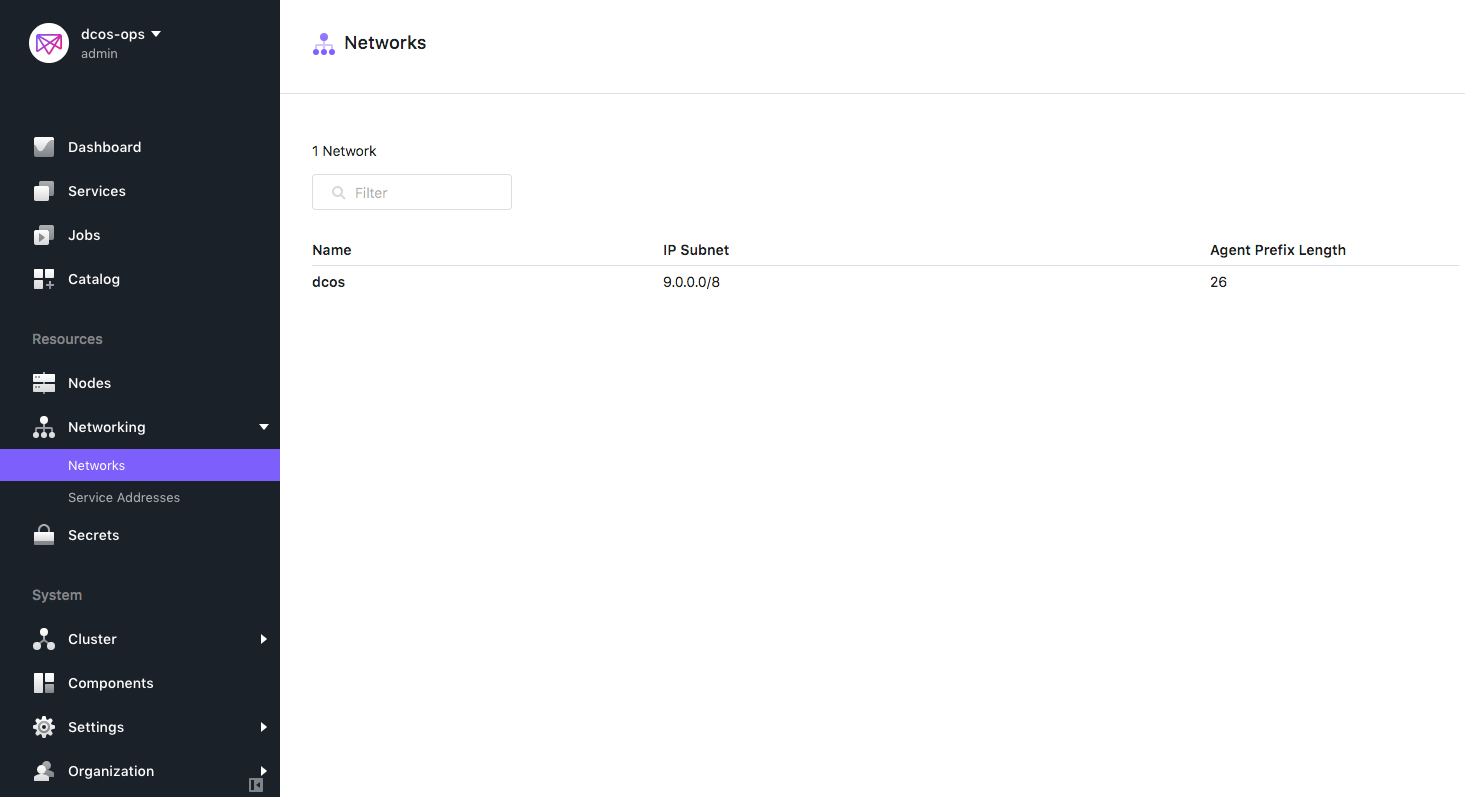

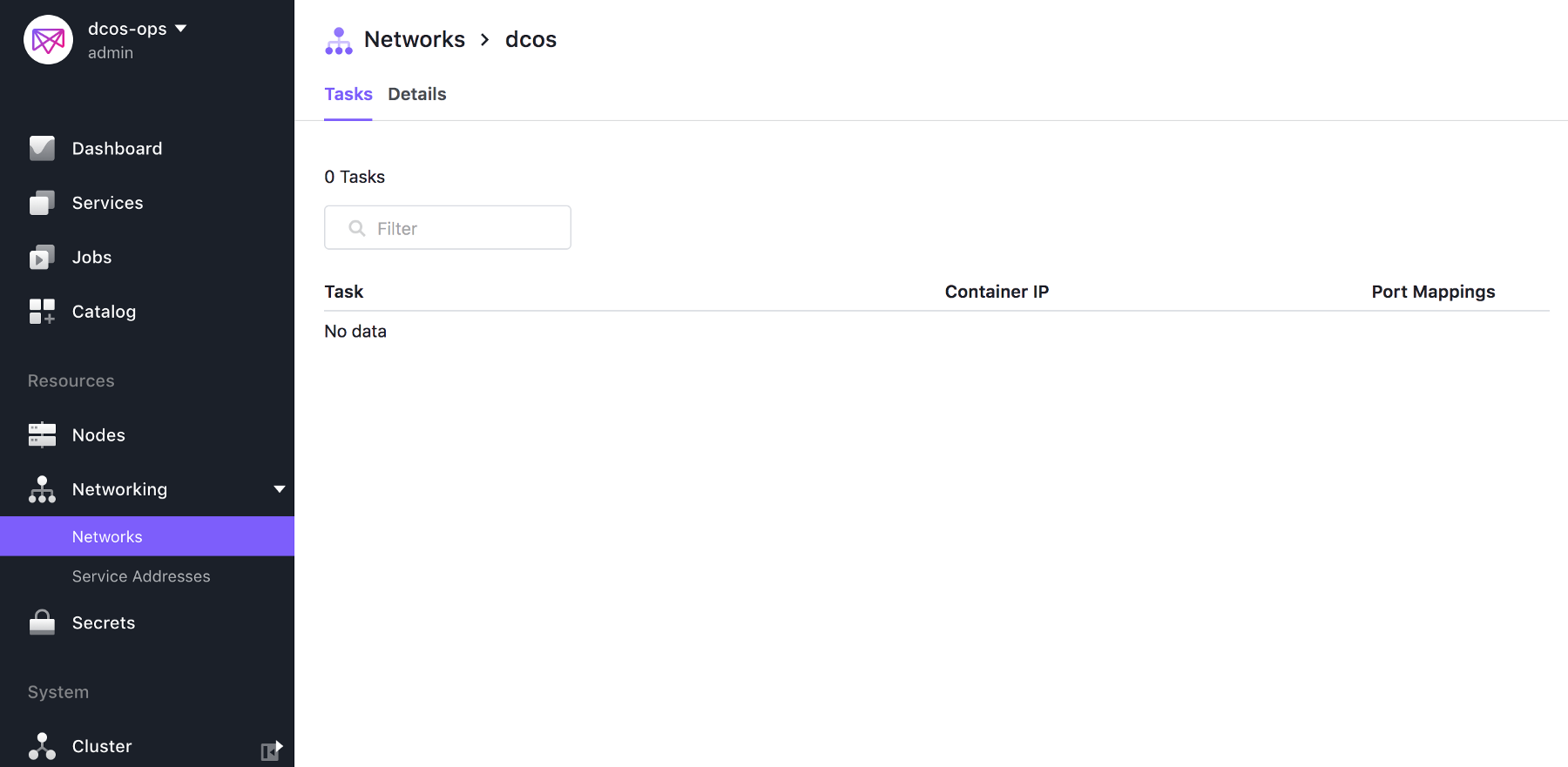

So if the endpoint is coming from the DC/OS Overlay, I should be able to see it in the DC/OS UI under my Networking tab and be able to resolve the FQDN, right? Let's see.

Hmmm… I am not seeing the "nginx" service running in Kubernetes in the DC/OS Overlay UI, but I am able to hit it from a service running outside of Kubernetes using the IP from the overlay network (endpoint). I am going to have to do a bit more research on this piece, but at least for the time being I know that it is possible for other services running on DC/OS to use the services running in Kubernetes. Just couldn't figure out how to use the FQDN or anything that would be persistent.

How about vice versa? How do services running in K8s communicate with other services running in DC/OS? This turned out to be extremely simple and is no different than what you are probably already doing. We choose to use VIPs for our services.

So let's connect to the nginx container running on Kubernetes and try to hit one of our services running on DC/OS outside of Kubernetes:

    kubectl exec -it nginx-7587c6fdb6-vzlqp /bin/sh
    ### Omitting installation of some packages here ####
    # curl dotnet.marathon.l4lb.thisdcos.directory:8000
    <!DOCTYPE html><html><head><meta charset="utf-8" /><meta name="viewport" content="width=device-width, initial-scale=1.0" /><title>Home Page - aspnetapp</title>.........</body></html>
    # nslookup dotnet.marathon.l4lb.thisdcos.directory
    Server:    198.51.100.4
    Address:   198.51.100.4#53

    Name:      dotnet.marathon.l4lb.thisdcos.directory
    Address:   11.220.254.231

So you can see from the output above that it is extremely simple for services running in K8s to resolve and communicate with other services running in DC/OS. Awesome!
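The VIP address used above follows a predictable shape, so it can be assembled from its parts. The helper below is a sketch with a made-up name, `vip_address`; it generalizes from the single `dotnet.marathon.l4lb.thisdcos.directory:8000` example in this post, so treat the pattern as an assumption to verify against your own VIP definitions.

```shell
# Build a DC/OS named-VIP address of the form
#   <vip-name>.<framework>.l4lb.thisdcos.directory:<port>
# Arguments: vip name, framework name, port.
vip_address() {
    printf '%s.%s.l4lb.thisdcos.directory:%s\n' "$1" "$2" "$3"
}

# Example from inside a pod:
#   curl "$(vip_address dotnet marathon 8000)"
```

Keeping this in one place means a K8s workload only needs the VIP name and port as configuration, not a full hostname.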

So for service discovery and networking, it appears that it is very easy for Kubernetes services to hit other services in DC/OS, but not so much the other way around. As I mentioned before, I may be missing a key piece here because I am not a Kubernetes expert. Perhaps this is currently a limitation for interacting with services running in Kubernetes? Either way, there are simple ways around this for now, such as utilizing NodePort, but it would still be nice to see ALL services using the DC/OS Overlay network be visible in the DC/OS UI and/or resolvable. I'll get back to the docs and the community to see if anyone can help me out on this!

To Do

Not too bad for the first couple of hours! There is still much to do and much to figure out. I have given myself a "To Do" list of things that I need to figure out that I will likely do a write up at some point when I get time.

- Upgrades — How to upgrade K8s clusters when new versions are released.

- Multi K8s Clusters — This is supposed to be coming in later releases on DC/OS. Managing multiple K8s clusters in DC/OS. Not sure if this makes sense yet but I could see a use case for allowing a separate K8s cluster to each development team.

- Ingress — Setting up an Ingress to external DC/OS cluster communication into K8s. Lots of different options to look at here.

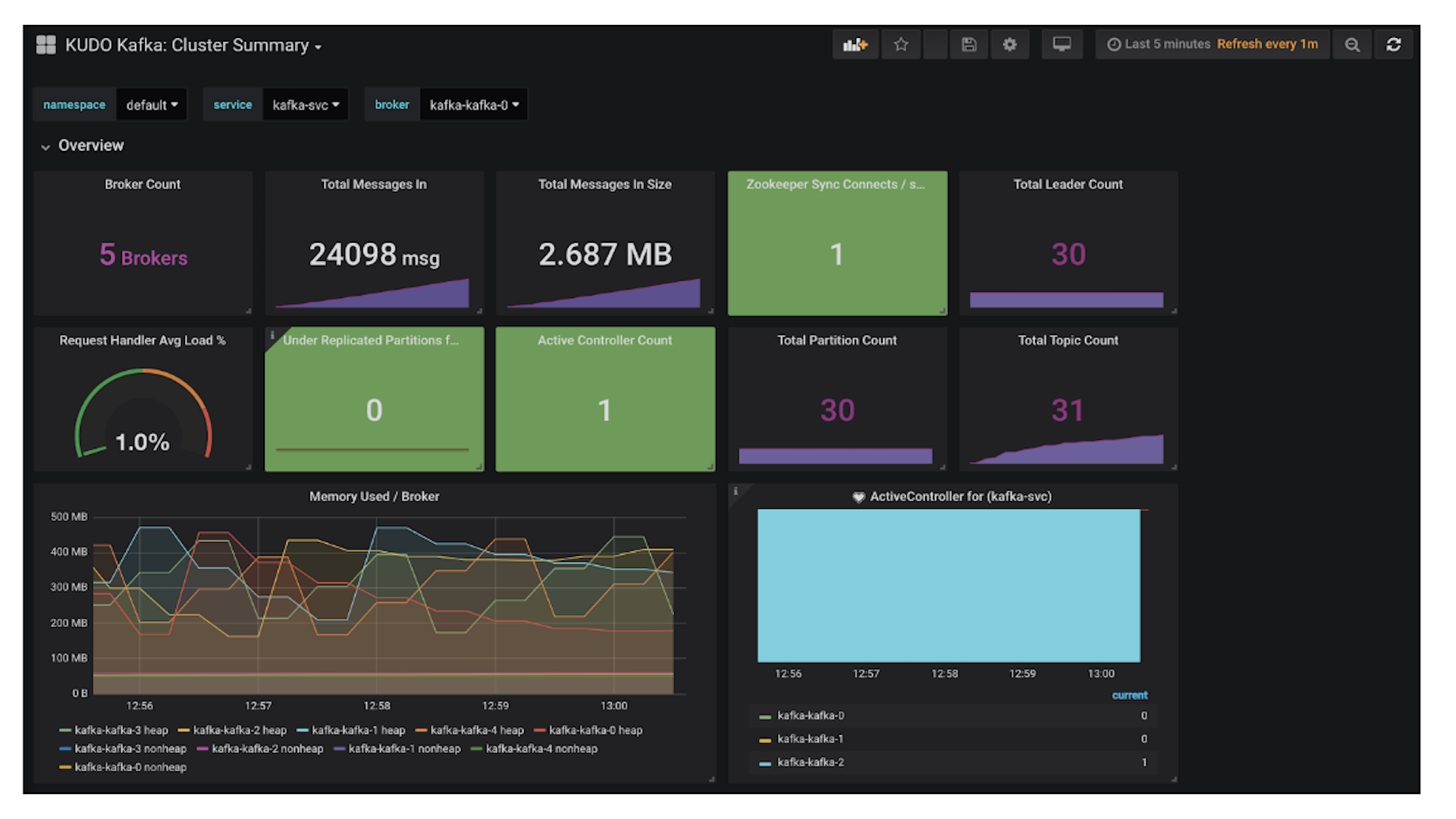

- Monitoring and Logging Kubernetes and its services — We already have logging and monitoring setup for our current DC/OS services but is there something additional we need to setup for K8s?

- Deep dive into how Kubernetes is running on DC/OS — Need to understand how this is actually running in DC/OS. I would assume just as every other framework. Is it using UCR? I can't hand over without a better all around understanding of how its running on DC/OS first.

Extremely Pleased with Kubernetes on DC/OS

In conclusion, I am extremely pleased with how easy it was to get a K8s cluster (HA'd) running and configured on DC/OS so far. Although there is still much to do and it has only been a few hours of poking around so far, I have not really had to put in much effort. Much was done with just a little bit of reading and executing a few commands. I am impressed with how well everything has worked, just as a standalone Kubernetes cluster would. The only complaint that I have so far is figuring out how to interact with services running on Kubernetes from other services on DC/OS persistently (likely my oversight) [Editor's note: documentation for external ingress to Kubernetes]. Outside of that, my first interaction went extremely well and I am overall extremely pleased. Hopefully I will get a chance soon to go through some of my "To Do" list and start sharing my findings.