For more than five years, DC/OS has enabled some of the largest, most sophisticated enterprises in the world to achieve unparalleled levels of efficiency, reliability, and scalability from their IT infrastructure. But now it is time to pass the torch to a new generation of technology: the D2iQ Kubernetes Platform (DKP). Why? Kubernetes has now achieved a level of capability that only DC/OS could formerly provide and is now evolving and improving far faster (as is true of its supporting ecosystem). That’s why we have chosen to sunset DC/OS, with an end-of-life date of October 31, 2021. With DKP, our customers get the same benefits provided by DC/OS and more, as well as access to the most impressive pace of innovation the technology world has ever seen. This was not an easy decision to make, but we are dedicated to enabling our customers to accelerate their digital transformations, so they can increase the velocity and responsiveness of their organizations to an ever-more challenging future. And the best way to do that right now is with DKP.

Note this blog post was originally posted here.

At the Vamp HQ in Amsterdam we get to talk to a lot of Docker, DC/OS and Kubernetes users. For us, as a startup in this corner of the tech space, talking to the engineers and architects behind some pretty serious container-based cloud environments is of course worth the proverbial gold. It's also a lot of fun to just help people out.

Over the last few months, while listening to the DC/OS user group, a specific pattern emerged:

- They have a Mesosphere DC/OS environment up and running.

- They have a CI/CD pipeline to deliver and deploy application builds.

- They are using the pre-packaged Marathon-LB to handle routing and load balancing.

From this, the question arises of how to add canary releasing and blue/green deployments to a Marathon-LB-based DC/OS environment. Outside of building something yourself, you have roughly two options here:

Option 1: Use Marathon-LB's zero-downtime deployment Python script. This gives you a very basic, command-line-driven tool to orchestrate blue/green deployments. However, as mentioned by the authors themselves, this tool is not production grade and is purely for demo purposes.

Option 2: Use Vamp's gateway functionality in isolation, augmenting and leveraging your current Marathon-LB setup. This allows you to use Vamp's powerful weight- and condition-based routing with minimal changes to your current environment. Under the hood, Marathon-LB and Vamp gateways both use HAProxy for routing.

What is Vamp?

Vamp is an open source, self-hosted platform for managing (micro)service oriented architectures that rely on container technology. Vamp provides a DSL to describe services, their dependencies and required runtime environments in blueprints.

Vamp takes care of route updates, metrics collection and service discovery, so you can easily orchestrate complex deployment patterns, such as A/B testing and canary releases.

Prepare your DC/OS environment

To get up and running, let's take the following architecture diagram as a starting point.

In the diagram we can see:

- An edge load balancer routing traffic to Marathon-LB where Marathon-LB is installed on a public slave in a DC/OS cluster.

- Marathon-LB fronting one version of one app.

- When deploying, a new version of the app replaces the old version.

In this scenario, you would deploy an application to Marathon either by using the UI in DC/OS or by simply posting a JSON file to Marathon using the DC/OS command line interface, i.e.:

    $ dcos marathon app add my-app.json

where my-app.json looks similar to the JSON below:

    {
      "id": "/simple-service-1.0.0",
      "container": {
        "type": "DOCKER",
        "docker": {
          "image": "magneticio/simpleservice:1.0.0",
          "network": "BRIDGE",
          "portMappings": [
            {
              "containerPort": 3000,
              "labels": {
                "VIP_0": "/simple-service-1.0.0:3000"
              }
            }
          ]
        }
      },
      "labels": {
        "HAPROXY_0_MODE": "http",
        "HAPROXY_0_VHOST": "simpleservice100.mycompany.com",
        "HAPROXY_GROUP": "external"
      }
    }

- Crucial to notice here is how we expose this application. Also, this is where things might get confusing...

- By setting the VIP_0 to /simple-service-1.0.0:3000, we are creating a "load balanced service address" in DC/OS. This address is used by Minuteman, DC/OS's built-in layer 4 distributed load balancer. Minuteman is a completely separate routing solution from Marathon-LB and comes out of the box with every DC/OS setup.

- If you are configuring this using the DC/OS UI, you just flick the switch shown in the screenshot below. After this, you can pretty much forget about Minuteman. Just take note of the address that is created for you, in this example: simple-service-1.0.0.marathon.l4lb.thisdcos.directory:3000

- By setting the HAPROXY_0_VHOST in the labels, we are ALSO exposing this app on port 80 of the public agent where Marathon-LB is running, under the VHOST name simpleservice100.mycompany.com. This means that, if our edge load balancer and any intermediary firewalls are configured to allow this traffic, we could add a record for that VHOST name to our public DNS (an A record pointing at the public IP address of our edge load balancer, or a CNAME pointing at its hostname) to expose the app on the public internet.

- Strictly speaking, we don't need this for our scenario, but it can be very convenient to be able to access a specific version of our app using a descriptive name. You could also choose to use something like service.blue.mycompany.com and service.green.mycompany.com in two app deployments that are two versions of the same service. This way, you always have a lot of control over which version you are accessing for debugging purposes.
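The relationship between the VIP_0 label and the resulting layer 4 address can be sketched in a few lines of Python. This is only an illustration that assumes the naming pattern seen in the example above (`<name>.marathon.l4lb.thisdcos.directory:<port>`) holds for your apps; the helper name `l4lb_address` is hypothetical and not part of DC/OS.

```python
# Suffix taken from the example address in this post.
L4LB_SUFFIX = "marathon.l4lb.thisdcos.directory"


def l4lb_address(vip_label: str) -> str:
    """Map a VIP label such as '/simple-service-1.0.0:3000' to the
    load balanced service address shown in the example above."""
    name, sep, port = vip_label.rpartition(":")
    name = name.strip("/")
    if not sep or not name or not port.isdigit():
        raise ValueError(f"expected '/<name>:<port>', got {vip_label!r}")
    return f"{name}.{L4LB_SUFFIX}:{port}"


if __name__ == "__main__":
    print(l4lb_address("/simple-service-1.0.0:3000"))
```

A CI job could use a helper like this to work out which address to hand to a gateway route without anyone having to look it up in the UI.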

Install Vamp and set up Vamp Gateways

To leverage Vamp's Gateways and start using smart routing patterns, we are going to add two Vamp components to this architecture:

- Vamp itself, installable as a DC/OS Universe package. Make sure to also install the required Elasticsearch dependency. This allows Vamp to collect routing and health status metrics. Check out our installation docs →

- One or more Vamp Gateway Agents (VGAs), also living on the public slave(s). Vamp can install these automatically on boot, but if you want to take control yourself, you can easily use this Marathon JSON file as a starting point and deploy it yourself.

The resulting architecture will be as follows.

Notice that we now use the Vamp-managed Vamp Gateway Agent as the ingress point for our edge load balancer and let the VGA route traffic to our apps. This means the VGA now hosts your public service endpoint regardless of the version of the service you are running. Clients using this service or app should use the address and port as configured in the VGA.

Having said that, we didn't change anything about how Marathon-LB exposes endpoints. Alongside the new VGA→Apps routing scheme, you can just keep using any existing routing directly into Marathon-LB.

However, from now on you will have to deploy any new version of your service as a separate and distinct deployment and not as a replacement or rolling deploy of an existing older version. Each deploy then gets a load balanced address based on the version number or some other naming scheme you come up with to distinguish which version is which. You could easily have your CI pipeline generate names based on Git hashes or semantic version numbers, as shown below in the abbreviated example.

    #
    # marathon JSON of version 1.0.0
    #
    {
      "id": "/simple-service-1.0.0",
      "portMappings": [
        {
          "containerPort": 3000,
          "labels": {
            "VIP_0": "/simple-service-1.0.0:3000"
          }
    ...
    }

    #
    # marathon JSON of version 1.1.0
    #
    {
      "id": "/simple-service-1.1.0",
      "portMappings": [
        {
          "containerPort": 3000,
          "labels": {
            "VIP_0": "/simple-service-1.1.0:3000"
          }
    ...
    }
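To make the naming scheme from the abbreviated example above concrete, here is a minimal Python sketch of how a CI step might generate a versioned Marathon app definition. It mirrors the structure of the my-app.json shown earlier. The function name `marathon_app` is hypothetical, and the sketch assumes that the Docker image tag matches the version string and that the VHOST is built by stripping the dots from the version, which is just one possible convention.

```python
import json


def marathon_app(version: str,
                 image_repo: str = "magneticio/simpleservice",
                 port: int = 3000) -> dict:
    """Build a Marathon app definition whose id, VIP and VHOST all
    carry the version, so each deploy gets its own address."""
    app_id = f"/simple-service-{version}"
    return {
        "id": app_id,
        "container": {
            "type": "DOCKER",
            "docker": {
                # Assumption: the image is tagged with the same version.
                "image": f"{image_repo}:{version}",
                "network": "BRIDGE",
                "portMappings": [
                    {
                        "containerPort": port,
                        "labels": {"VIP_0": f"{app_id}:{port}"},
                    }
                ],
            },
        },
        "labels": {
            "HAPROXY_0_MODE": "http",
            # Assumption: VHOST naming follows the 1.0.0 -> simpleservice100 example.
            "HAPROXY_0_VHOST": f"simpleservice{version.replace('.', '')}.mycompany.com",
            "HAPROXY_GROUP": "external",
        },
    }


if __name__ == "__main__":
    # Write the result to a file and deploy it with
    # `dcos marathon app add <file>` as shown earlier.
    print(json.dumps(marathon_app("1.1.0"), indent=2))
```

The same function works with a Git hash instead of a semantic version, as long as the resulting names stay unique per deploy.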

To get this setup running, we simply create a new gateway that listens, for instance, on port 8080 in Vamp. We add two routes to this gateway, each pointing to one specific service port managed by Marathon-LB. The traffic then flows as follows:

The Vamp artifact to create this is just this seven-line piece of YAML, in which we have already split the traffic using the weight option. We post this YAML either to the Vamp API or just copy and paste it into the UI. You can see we just redirect traffic to the layer 4 addresses.

    name: simpleservice
    port: 8080/http
    routes:
      "[simple-service-1.0.0.marathon.l4lb.thisdcos.directory:3000]":
        weight: 50%
      "[simple-service-1.1.0.marathon.l4lb.thisdcos.directory:3000]":
        weight: 50%
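If you want a pipeline to produce this artifact rather than writing it by hand, a small generator is enough. The Python sketch below renders the same YAML layout as the gateway above from a mapping of addresses to weights. The function name `gateway_yaml` is hypothetical, and the check that weights add up to 100 is an assumption based on the example rather than a statement about what Vamp enforces.

```python
def gateway_yaml(name: str, port: str, weights: dict) -> str:
    """Render a gateway artifact in the layout shown above.

    weights maps 'host:port' addresses to integer percentages.
    """
    for address, weight in weights.items():
        if not isinstance(weight, int) or not 0 <= weight <= 100:
            raise ValueError(f"weight for {address} must be an integer 0..100")
    # Assumption: route weights should total 100%, as in the example.
    if sum(weights.values()) != 100:
        raise ValueError("route weights must add up to 100")

    lines = [f"name: {name}", f"port: {port}", "routes:"]
    for address, weight in weights.items():
        lines.append(f'  "[{address}]":')
        lines.append(f"    weight: {weight}%")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(gateway_yaml(
        "simpleservice",
        "8080/http",
        {
            "simple-service-1.0.0.marathon.l4lb.thisdcos.directory:3000": 50,
            "simple-service-1.1.0.marathon.l4lb.thisdcos.directory:3000": 50,
        },
    ))
```

The output can then be posted to the Vamp API or pasted into the UI, exactly like the hand-written version.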

If you fire up the Vamp UI you can start manipulating traffic and executing canary release and blue/green release patterns using all of the functionality available in full Vamp installations. Alternatively, you can use the Vamp CLI to directly integrate these patterns into bash scripts or CI pipelines.
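When scripting a canary release, it helps to decide on the sequence of weight changes up front. The Python sketch below generates such a sequence for two routes. It is an illustration only: the function name `canary_steps` and the fixed step size are assumptions, and actually applying each step (through the UI, CLI or API) is left out.

```python
def canary_steps(step: int = 10):
    """Yield (old_weight, new_weight) pairs that move traffic from the
    old version to the new one in increments of `step` percent."""
    if not 0 < step <= 100:
        raise ValueError("step must be between 1 and 100")
    new = 0
    while new < 100:
        # Clamp the final increment so the pair always totals 100.
        new = min(100, new + step)
        yield 100 - new, new


if __name__ == "__main__":
    for old, new in canary_steps(25):
        print(f"old version: {old}%  new version: {new}%")
```

With `step=100` the sequence collapses to a single switch from old to new, which is the blue/green case.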

This scenario does come with a couple of caveats and gotchas which you should be aware of:

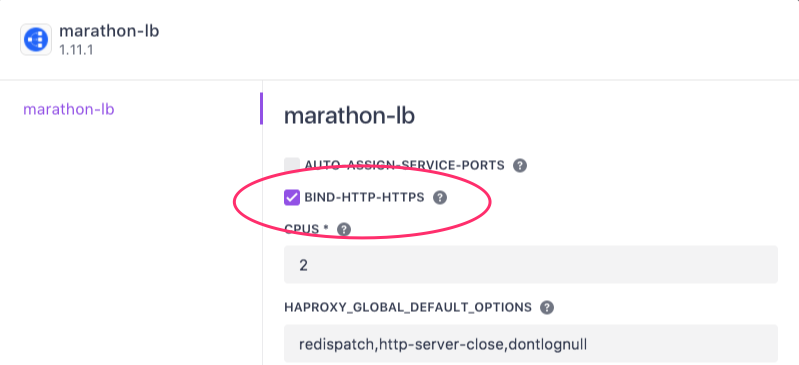

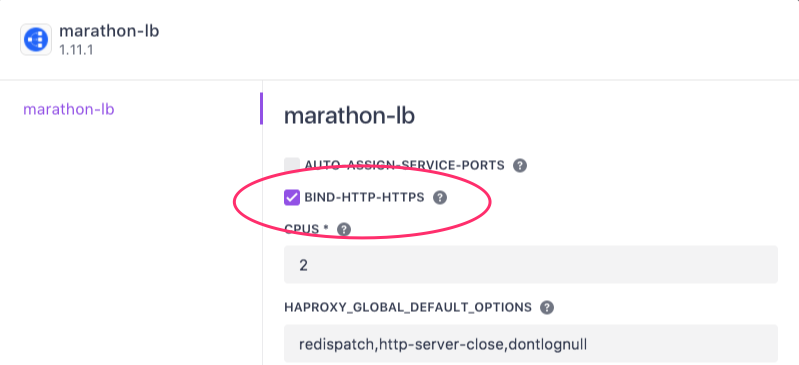

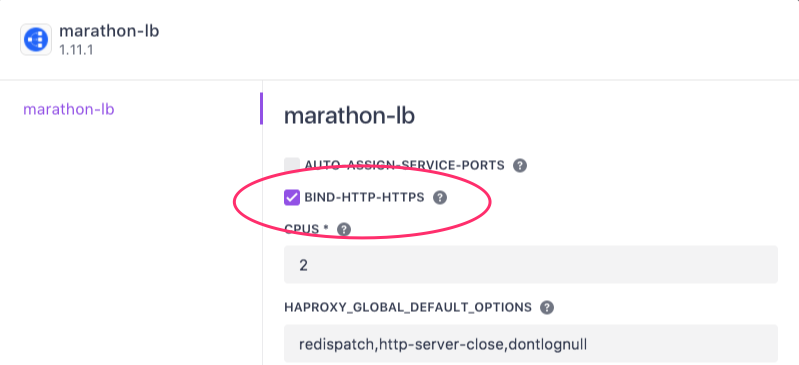

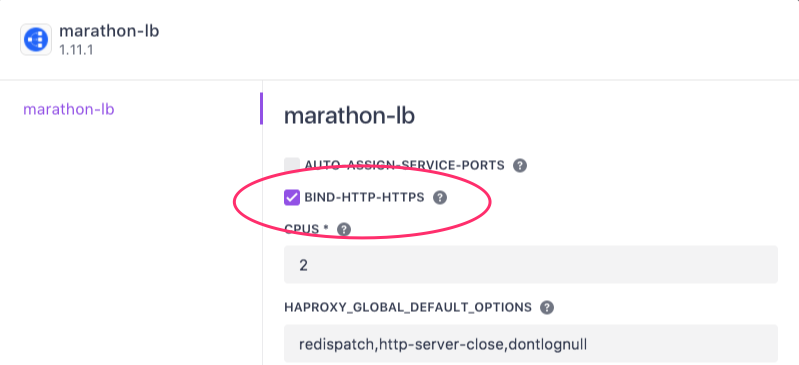

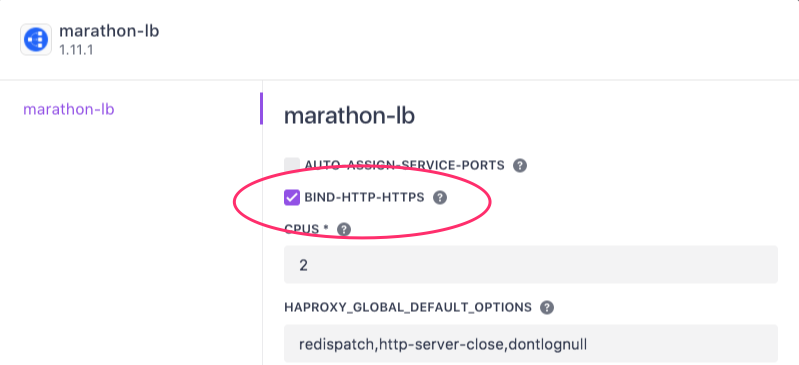

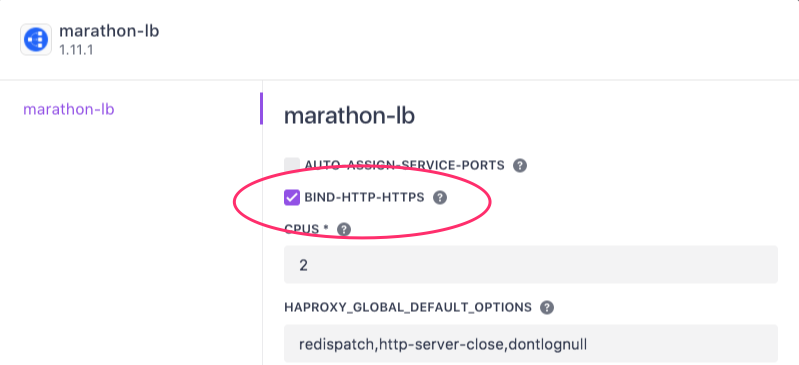

- We are effectively running two HAProxy instances on the same node. To avoid port conflicts we should establish a regime where, for instance, we assign the port range 8000–9999 to VGA and 10000–11000 to Marathon-LB. Moreover, if you want to expose any virtual hosts on ports 80 and/or 443, you will have to decide which routing solution (Marathon-LB or VGA) will "get to own" these ports. If you decide that Vamp's VGA should own these ports, make sure to deselect the bind-http-https option when installing Marathon-LB.

- Because we are still deploying to Marathon outside of the Vamp orchestrator, the VGA does not "know" when a service is 100% deployed, booted and ready for traffic. This means we should be careful about sending traffic to new services directly after a deploy: they might need some time to actually start answering requests.
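One way to handle the readiness caveat in a deploy script is to poll the new version before giving it any weight. The Python sketch below does this with the standard library only. The names `http_ok` and `wait_until_ready` are hypothetical, and the sketch assumes the service answers a plain GET with a 2xx status once it is ready and that the script runs somewhere that can reach the service address.

```python
import time
import urllib.error
import urllib.request
from typing import Callable


def http_ok(url: str, timeout: float = 2.0) -> bool:
    """Return True if a GET to `url` answers with a 2xx status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 300
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure or an HTTP error status.
        return False


def wait_until_ready(probe: Callable[[], bool],
                     attempts: int = 30,
                     delay: float = 2.0,
                     sleep: Callable[[float], None] = time.sleep) -> bool:
    """Call `probe` until it returns True or `attempts` runs out."""
    for attempt in range(attempts):
        if probe():
            return True
        if attempt < attempts - 1:
            sleep(delay)
    return False
```

A pipeline could call `wait_until_ready(lambda: http_ok("http://simple-service-1.1.0.marathon.l4lb.thisdcos.directory:3000/"))` and only start shifting weight to the new route once it returns True.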

Wrap up

Congratulations! You've just added powerful routing to your DC/OS cluster. Vamp comes with a lot of other goodies, but this first step is an easy way to get to know Vamp.

- Read more on how Vamp tackles routing and load balancing →

- Get started with condition based routing →

- Questions or trouble? Head over to http://vamp.io/support/