Mesosphere Jupyter Service (MJS) provides Jupyter Notebooks-as-a-service with machine learning acceleration to dramatically lower costs.


Machine learning is key to digital transformation and will become a competitive differentiator for enterprise organizations over the next few years. Enterprises all over the world already use artificial intelligence, in industries ranging from insurance and healthcare to automotive. While machine learning models present many opportunities for enterprises, operationalizing them poses many challenges.

Moving quickly often introduces technical debt

When software engineers choose code that is easy to implement in the short run instead of the best overall solution, shipping slows down and changes become increasingly difficult to implement later on, especially when data and models change frequently and need to be continuously updated. This hidden technical debt creates a tremendous amount of manual work to operationalize the orchestration of distributed systems like Spark or TensorFlow.

Deployment requires many lines of DIY code

Deploying Jupyter Notebooks and interfacing with Spark or distributed TensorFlow in a multi-tenant environment requires many lines of DIY code and a significant investment of time before a single line of machine learning code can be written. An entire team can spend weeks setting up the underlying stack and still run into the many operational pitfalls that come with operating these environments.

Accelerate machine learning models with Mesosphere Jupyter Service (MJS)

The Mesosphere Jupyter Service (MJS), a new package addition to our DC/OS Service Catalog (notated in the Catalog as "JupyterLab"), is one of the first steps to help tackle some of these challenges and introduce operational best practices. The solution provides a self-service platform so that teams can focus on exploring their datasets, writing their algorithms, generating more experiments, and conducting their analysis rather than worrying about deploying and managing the underlying infrastructure. Frequent changes are made easy by continuous software delivery concepts that create an automated pipeline and fragility is reduced by applying production infrastructure operations best practices.

Thanks to OpenID Connect authentication and authorization with support for Windows Integrated Authentication (WIA) and Active Directory Federation Services (ADFS), the Mesosphere Jupyter Service enables your business to adopt enterprise standards across any infrastructure while still enabling experimentation and developer choice.

The initial release provides JupyterLab, the next-generation web-based interface for Project Jupyter, with secured connectivity to data lakes and data sets on S3 and (Kerberized) HDFS as well as GPU-enabled Spark and distributed TensorFlow. With the JupyterLab package we deliver secure, cloud-native Jupyter Notebooks-as-a-Service to empower data scientists to perform analytics and distributed machine learning on elastic GPU-pools with access to big and fast data services.

This beta release has already been adopted by many of our customers to help them with machine learning acceleration and dramatically lower costs. The package comes pre-installed with a Swiss Army Knife of tools that makes a Data Scientist's life easier (see release notes).

If you're interested in learning how everything comes together with our TensorFlowOnSpark Notebook, read on for a detailed guide below.

TensorFlow with GPU support on Spark

Preparation

As mentioned earlier, the manual effort of managing and maintaining distributed systems can be time-consuming and cumbersome. One tool we wrote to get you up and running quickly is terraform-dcos. With a few simple commands, it automates most of the effort associated with provisioning cloud instances, DC/OS, or a Kubernetes cluster. Mesosphere DC/OS is ready to run anywhere from laptops to massive cloud computing platforms.

To get started, first install a Mesosphere DC/OS Cluster with at least 11 agents (6 GPU and 5 private agents) on AWS. Here is an example desired_cluster_profile.tfvars to install DC/OS. Make sure you follow the Getting Started Guide in our DC/OS Terraform repo.

Once your cluster is up and running, install Marathon-LB and HDFS with default values as prerequisites.

Installing JupyterLab on Mesosphere DC/OS from Catalog

After all prerequisites are satisfied, the next step is to install JupyterLab on Mesosphere DC/OS as described in the Quick Start. The easiest way to deploy the service is via the UI. In the Mesosphere DC/OS Dashboard, navigate to the Catalog tab and search for "jupyterlab."

HDFS on Mesosphere DC/OS demonstrates how easy it is to deploy such a complex distributed system on the platform. In order to make the JupyterLab package work with GPU support, Marathon-LB and HDFS, you'll need to make three adjustments:

- Enable GPU support and allocate GPU resources to your notebook.

- Point to the AWS Elastic LoadBalancer vHost for your service under Networking [externally reachable vHost (e.g., the Public Agent ELB in an AWS environment)]. In my example the Public Agent ELB address translates to fabianbaie-tf3ac8-pub-agt-elb-1341462163.us-west-2.elb.amazonaws.com.

- Point to the HDFS Scheduler Service URL from which the HDFS configuration can be retrieved under Environments. When you install HDFS with default settings this translates to http://api.hdfs.marathon.l4lb.thisdcos.directory/v1/endpoints.
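The three adjustments above can be collected and sanity-checked before you enter them in the UI. The following is only a sketch: the key names (`gpu`, `networking`, `external_vhost`, `environment`, `hdfs_config_url`) are hypothetical labels chosen for illustration and are not the package's actual option schema, so check the README for the real field names.

```python
from urllib.parse import urlparse


def build_adjustments(vhost: str, hdfs_config_url: str, gpus: int = 1) -> dict:
    """Collect the three install-time adjustments in one place.

    All dictionary keys are hypothetical; they only mirror the three
    bullet points (GPU, Networking, Environments) described in the text.
    """
    if gpus < 1:
        raise ValueError("GPU support needs at least one GPU")
    # The vHost is entered as a bare hostname, without scheme or path.
    if "://" in vhost or "/" in vhost:
        raise ValueError("vHost should be a bare hostname")
    parsed = urlparse(hdfs_config_url)
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        raise ValueError("HDFS configuration URL must be an http(s) URL")
    return {
        "gpu": {"enabled": True, "gpus": gpus},
        "networking": {"external_vhost": vhost},
        "environment": {"hdfs_config_url": hdfs_config_url},
    }


adjustments = build_adjustments(
    "fabianbaie-tf3ac8-pub-agt-elb-1341462163.us-west-2.elb.amazonaws.com",
    "http://api.hdfs.marathon.l4lb.thisdcos.directory/v1/endpoints",
)
```

Keeping the values in one structure like this makes it easier to repeat the same installation on another cluster, where only the vHost is likely to change.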

Before you run and deploy JupyterLab, you'll need to start the Spark History Server and TensorBoard services to enable monitoring and debugging.

You can also set a password to access JupyterLab notebooks under Environments. This is optional, but if the password is not set, it will automatically be set to jupyter.

Finally, after reviewing your configuration, click Run Service to install the JupyterLab configuration.

For those of you who are interested in more fine tuning please have a look at the installation section in the README.

While your image is deploying (the initial deployment can take a while because the Docker image is ~5 GB), let's walk through the framework that is actually deploying your service: Marathon. Marathon is an Apache Mesos framework for container orchestration that helps you deploy and manage applications on top of DC/OS. It is also used as a meta framework and allows you to start other frameworks (even itself) on top of it. You can easily reach the Marathon UI by pointing your browser to its endpoint.

In the Marathon UI, change the Docker image to latest to ensure you are running the most recent MJS version. Next, select your JupyterLab-Notebook application, scale it to 0, apply your configuration changes, and scale it back to 1.
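If you prefer scripting over clicking, the scale-down and scale-up steps could also be expressed against Marathon's REST API, which accepts a partial app definition via `PUT /v2/apps/{appId}`. The sketch below only builds the requests and does not send them. The cluster URL, the token, and the app ID `jupyterlab-notebook` (inferred from the service path used later in this post) are assumptions for illustration.

```python
import json
import urllib.request


def scale_request(cluster_url: str, app_id: str, instances: int,
                  token: str) -> urllib.request.Request:
    """Build (but do not send) a Marathon request that sets the instance count."""
    url = f"{cluster_url.rstrip('/')}/service/marathon/v2/apps/{app_id.strip('/')}"
    body = json.dumps({"instances": instances}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="PUT",
        headers={
            "Content-Type": "application/json",
            # DC/OS expects the auth token in this form (assumed setup).
            "Authorization": f"token={token}",
        },
    )


# Hypothetical values; replace them with your own cluster URL and token.
CLUSTER = "https://cluster.example.com"
scale_down = scale_request(CLUSTER, "/jupyterlab-notebook", 0, "<token>")
scale_up = scale_request(CLUSTER, "/jupyterlab-notebook", 1, "<token>")
```

Passing each request to `urllib.request.urlopen` would apply it. Configuration changes would go in between the two calls, mirroring the order described above.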

Once deployed, you can connect to the JupyterLab notebook UI by pointing your browser to your vHost fabianbaie-tf86a5-pub-agt-elb-1194315762.us-west-2.elb.amazonaws.com/jupyterlab-notebook and entering your chosen dcos password to authenticate. The main Dashboard should show the JupyterLab Launcher:

Running the TensorFlowOnSpark Notebook

Before you hit the ground running, make sure you're familiar with JupyterLab. Play around with the Launcher view by adding a Python3 notebook and running hello-world in it. One very handy feature is the Terminal, which lets you run commands inside your container. This is very helpful for accessing data from S3 or cloning repos from GitHub.

To get started, clone the dcos-jupyterlab-service repo that contains the TFoS notebook. In order to clone the repo, you need to launch a Terminal and type: git clone https://github.com/dcos-labs/dcos-jupyter-service

For our TensorFlowOnSpark example we train a neural network on the MNIST dataset, which is read from HDFS. The Spark job that prepares our data is included in our notebook. Once you have cloned the repo, go ahead and launch our TFoS.ipynb notebook. Browse to the notebooks folder in the cloned dcos-jupyterlab-service repo and double-click to launch the TFoS notebook:

So let's have a closer look at our notebook and run it cell by cell. We run each cell by selecting it and pressing SHIFT+ENTER. Some cells take longer; others execute very quickly.

The first cell just includes a bunch of imports:

The second cell cleans up your environment in case you have to start from scratch. It deletes the mnist data, model and predictions on HDFS as well as cleans up your Mesos sandbox from any artifacts:

The third and fourth cells are the equivalent of running the same commands in the Terminal. The third cell clones the TensorFlowOnSpark repo into your Mesos sandbox. This is needed later to access the mnist_dist.py and mnist_spark.py files to build your model. The fourth cell downloads the MNIST data artifact into your Mesos sandbox:

The fifth cell prepares the MNIST dataset in the TFRecord file format, a simple record-oriented binary format that many TensorFlow applications use for training data, and stores it on our HDFS cluster. This is the equivalent of the terminal command:

    eval \
      spark-submit \
      ${SPARK_OPTS} \
      --verbose \
      $(pwd)/tensorflowonspark/examples/mnist/mnist_data_setup.py \
      --output mnist/tfr \
      --format tfr
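To make "record-oriented binary format" more concrete, here is a minimal sketch of how a single TFRecord record is framed, following the layout in TensorFlow's TFRecord documentation: a little-endian 64-bit length, a masked CRC-32C of that length, the payload, and a masked CRC-32C of the payload. This is illustrative only. The Spark job above relies on existing writers rather than hand-rolled framing, and the function names here are made up for this example.

```python
import struct

_CRC32C_POLY = 0x82F63B78  # reflected Castagnoli polynomial
_MASK_DELTA = 0xA282EAD8   # masking constant used by TFRecord


def _make_table():
    table = []
    for i in range(256):
        crc = i
        for _ in range(8):
            crc = (crc >> 1) ^ _CRC32C_POLY if crc & 1 else crc >> 1
        table.append(crc)
    return table


_TABLE = _make_table()


def crc32c(data: bytes) -> int:
    """Plain table-driven CRC-32C (Castagnoli)."""
    crc = 0xFFFFFFFF
    for byte in data:
        crc = _TABLE[(crc ^ byte) & 0xFF] ^ (crc >> 8)
    return crc ^ 0xFFFFFFFF


def masked_crc(data: bytes) -> int:
    """Rotate the CRC right by 15 bits and add a constant, modulo 2**32."""
    crc = crc32c(data)
    rotated = ((crc >> 15) | (crc << 17)) & 0xFFFFFFFF
    return (rotated + _MASK_DELTA) & 0xFFFFFFFF


def frame_record(payload: bytes) -> bytes:
    """Wrap one payload in TFRecord framing."""
    header = struct.pack("<Q", len(payload))
    return (header + struct.pack("<I", masked_crc(header))
            + payload + struct.pack("<I", masked_crc(payload)))


def unframe_record(record: bytes) -> bytes:
    """Inverse of frame_record, verifying both checksums."""
    (length,) = struct.unpack("<Q", record[:8])
    (length_crc,) = struct.unpack("<I", record[8:12])
    payload = record[12:12 + length]
    (payload_crc,) = struct.unpack("<I", record[12 + length:16 + length])
    if length_crc != masked_crc(record[:8]) or payload_crc != masked_crc(payload):
        raise ValueError("corrupt record")
    return payload
```

A TFRecord file is simply a concatenation of such framed records, which is why the format is easy to split and stream. In practice each payload is usually a serialized `tf.train.Example` protocol buffer.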

The sixth cell prepares the arguments that we pass to our mnist_dist.py later. We can always override those default values later, but in general this should work out of the box. One thing to note is that the number of executors needs to match the number of available GPU agents in your cluster. Because we installed the cluster with 6 GPU agents and 1 of them is already allocated to the Mesosphere Jupyter Service, this leaves us with 5 available GPU agents:
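The executor count follows from simple arithmetic, sketched here as a tiny helper. The function and parameter names are illustrative and do not come from the notebook.

```python
def available_executors(gpu_agents: int, reserved_for_notebook: int = 1) -> int:
    """GPU agents left for Spark executors after the notebook takes its share."""
    if reserved_for_notebook > gpu_agents:
        raise ValueError("cannot reserve more GPU agents than the cluster has")
    return gpu_agents - reserved_for_notebook


# 6 GPU agents in the cluster, 1 already used by the notebook itself.
executors = available_executors(6)
```

If you size your cluster differently, recompute this value and override the default accordingly so that no executor waits for a GPU that never becomes available.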

The 7th and 8th cells configure our SparkContext. You can easily switch between them by commenting or uncommenting the cells with CMD+A+/. Because we are running the GPU version of MJS (we enabled GPU during the install), you have the option to change the executor Docker image to mesosphere/mesosphere-data-toolkit:latest-gpu and assign each executor 1 GPU:
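As a rough sketch of what such a GPU configuration might contain, the snippet below assembles Spark properties as plain key/value pairs. `spark.mesos.executor.docker.image` and `spark.mesos.gpus.max` are standard Spark-on-Mesos properties, but which properties the notebook actually sets is an assumption here, and the helper names are made up for illustration.

```python
def gpu_executor_conf(num_executors: int, gpus_per_executor: int = 1,
                      image: str = "mesosphere/mesosphere-data-toolkit:latest-gpu"):
    """Return Spark properties for GPU executors as (key, value) pairs."""
    return [
        # Docker image in which each Mesos-launched executor runs.
        ("spark.mesos.executor.docker.image", image),
        # Upper bound on the total number of GPUs the job may acquire.
        ("spark.mesos.gpus.max", str(num_executors * gpus_per_executor)),
    ]


def as_submit_flags(pairs):
    """Render the pairs as spark-submit style `--conf key=value` flags."""
    flags = []
    for key, value in pairs:
        flags += ["--conf", f"{key}={value}"]
    return flags


pairs = gpu_executor_conf(num_executors=5)
flags = as_submit_flags(pairs)
```

In PySpark, the same pairs could be applied with `SparkConf().setAll(pairs)` before creating the SparkContext.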

The 9th cell creates the SparkContext and adds the mnist_dist.py file, which is our distributed TensorFlow MNIST model, as a dependency to our context:

Now when we run this, something interesting happens behind the scenes. This will launch 5 Spark workers on top of Mesos that are ready to run your distributed TensorFlow. You can monitor the Mesos UI dashboard to check whether all five Spark workers are running, because pulling the image can keep them in the staging state for quite some time. Access the Mesos UI dashboard in another browser tab via https://<cluster-url>/mesos. Once you see all workers running, come back to your Notebook tab and move on to the next cells:

In the 10th to 12th cells we import the mnist_dist.py file into our notebook and check whether our MNIST data was prepared and written correctly to HDFS. The output should show you the existing files and folders in HDFS under mnist/tfr/train:

Now in cells 13 and 14 we parse the right arguments to train our model. This includes setting the mode, the epochs, the steps, and the output folder of the model. Executing cell 15 then starts the distributed TensorFlow training on the Spark executors:
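A minimal sketch of this kind of argument handling with `argparse` is shown below. The flag names and defaults are assumptions modeled on the settings named above (mode, epochs, steps, output folder), not a copy of the notebook's parser.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical parser covering the settings mentioned in the text."""
    parser = argparse.ArgumentParser(description="MNIST on Spark (sketch)")
    parser.add_argument("--mode", choices=["train", "inference"], default="train",
                        help="train a model or run predictions")
    parser.add_argument("--epochs", type=int, default=1,
                        help="number of passes over the training data")
    parser.add_argument("--steps", type=int, default=1000,
                        help="maximum number of training steps")
    parser.add_argument("--model", default="mnist/model",
                        help="HDFS folder where the model is written")
    parser.add_argument("--output", default="mnist/predictions",
                        help="HDFS folder where predictions are written")
    parser.add_argument("--cluster_size", type=int, default=5,
                        help="number of executors (one per free GPU agent)")
    return parser


# Passing an explicit list avoids reading the notebook kernel's own argv.
train_args = build_parser().parse_args(["--mode", "train", "--epochs", "2"])
infer_args = build_parser().parse_args(["--mode", "inference"])
```

Parsing an explicit list instead of `sys.argv` is what makes this pattern work inside a notebook, and it lets you reuse the same parser for the training run and for the later inference run.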

At the same time, you can monitor the progress of your Spark jobs in the Sparkmonitor UI by removing the /lab? at the end of your Notebook URL and adding /sparkmonitor instead:
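The URL rewrite can be expressed as a small helper. This is a sketch that assumes the notebook URL ends in `/lab` with an optional query string; the host name in the example is a placeholder.

```python
from urllib.parse import urlsplit, urlunsplit


def sibling_endpoint(notebook_url: str, endpoint: str) -> str:
    """Replace the trailing /lab (and any query string) with another endpoint."""
    parts = urlsplit(notebook_url)
    path = parts.path
    if path.endswith("/lab"):
        path = path[: -len("/lab")]
    path = path.rstrip("/") + "/" + endpoint.strip("/")
    # Drop query and fragment so the trailing "?" disappears as well.
    return urlunsplit((parts.scheme, parts.netloc, path, "", ""))


monitor_url = sibling_endpoint(
    "https://vhost.example.com/jupyterlab-notebook/lab?", "sparkmonitor"
)
```

The same helper works for any other endpoint served under the same base path, such as `/tensorboard`.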

Similarly, you can follow the progress of the training in TensorBoard by accessing the TensorBoard endpoint /tensorboard. Your TensorFlow training progress should look like this:

Check in the 16th cell if our model was successfully written to HDFS:

As in cells 13 and 14, the next two cells (17 and 18) run our TensorFlow nodes, but this time in inference mode to predict the actual numbers. Again, have a look at your Sparkmonitor tab while this is running to see the progress:

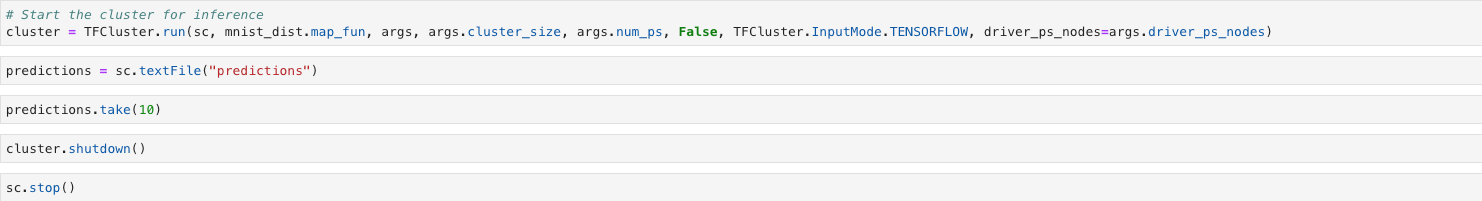

With cells 19 and 20 you'll be able to see some of those predictions and their accuracy. The last two cells shut down your TensorFlow cluster and stop the SparkContext.
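To illustrate how accuracy can be derived from such predictions, the sketch below counts matching label/prediction pairs. The line format (`Label: 7, Prediction: 7`) is an assumption made for this example and may differ from what the notebook writes to HDFS.

```python
import re

# Assumed line format, e.g. "2018-01-01T00:00:00 Label: 7, Prediction: 7".
_PAIR = re.compile(r"Label:\s*(\d+),\s*Prediction:\s*(\d+)")


def accuracy(lines) -> float:
    """Fraction of lines whose predicted digit equals the label."""
    total = 0
    correct = 0
    for line in lines:
        match = _PAIR.search(line)
        if match is None:
            continue  # skip lines that do not contain a label/prediction pair
        total += 1
        if match.group(1) == match.group(2):
            correct += 1
    if total == 0:
        raise ValueError("no label/prediction pairs found")
    return correct / total


sample = [
    "Label: 7, Prediction: 7",
    "Label: 2, Prediction: 2",
    "Label: 1, Prediction: 1",
    "Label: 4, Prediction: 9",
]
score = accuracy(sample)
```

On the four made-up sample lines, three predictions match their labels, so the helper returns 0.75.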

Now you can see how easy it is to run Apache Spark and distributed TensorFlow within a JupyterLab Notebook on Mesosphere. Without Mesosphere Jupyter Service data science teams would not be empowered to innovate fast, in a powerful, scalable, and efficient environment. In the coming weeks we will add more example notebooks and encourage everyone to contribute their own notebooks and examples.