
This is the second of a 2-part series on service discovery and load balancing.

In the previous post we discussed the basics of setting up marathon-lb. In this post, we'll explore some more advanced features.

Marathon-lb works by automatically generating configuration for HAProxy and then reloading HAProxy as needed. Marathon-lb generates the HAProxy configuration based on application data available from the Marathon REST API. It can also subscribe to the Marathon Event Bus for real-time updates. When an application starts, stops, relocates or has any change in health status, marathon-lb will automatically regenerate the HAProxy configuration and reload HAProxy.

Marathon-lb has a templating feature for specifying arbitrary HAProxy configuration parameters. Templates can be set either globally (for all apps), or on a per-app basis using labels. Let's demonstrate an example of how to specify our own global template. Here's the template we'll use:

    global
      daemon
      log /dev/log local0
      log /dev/log local1 notice
      maxconn 4096
      tune.ssl.default-dh-param 2048

    defaults
      log global
      retries 3
      maxconn 3000
      timeout connect 5s
      timeout client 30s
      timeout server 30s
      option redispatch

    listen stats
      bind 0.0.0.0:9090
      balance
      mode http
      stats enable
      monitor-uri /_haproxy_health_check

In the example above, we've changed these items from the defaults: maxconn, timeout client, and timeout server. Next, create a file called HAPROXY_HEAD in a directory called templates with the contents above. Then, tar or zip the file. Here's a handy script you can use to do this.
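If you'd rather script the packaging step yourself, here is a minimal Python sketch. It assumes the archive should hold a top-level templates/ directory containing HAPROXY_HEAD, as the steps above suggest. The function name package_templates is made up for illustration.

```python
import tarfile
from pathlib import Path


def package_templates(head_config, workdir):
    """Write templates/HAPROXY_HEAD under workdir and archive it as templates.tgz.

    Assumption: the archive layout is a single top-level templates/ directory.
    """
    workdir = Path(workdir)
    templates = workdir / "templates"
    templates.mkdir(parents=True, exist_ok=True)
    (templates / "HAPROXY_HEAD").write_text(head_config)

    archive = workdir / "templates.tgz"
    with tarfile.open(archive, "w:gz") as tar:
        # arcname keeps archive paths relative: templates/HAPROXY_HEAD
        tar.add(templates, arcname="templates")
    return archive


if __name__ == "__main__":
    # Placeholder contents; paste the full template from above instead.
    print(package_templates("global\n  daemon\n", "."))
```

Running it in an empty directory leaves a templates.tgz next to the templates directory, ready to be served over HTTP.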

Take the file you created (templates.tgz if you use the script), and make it available from an HTTP server. If you'd like to use the sample one, use this URI: https://downloads.mesosphere.io/marathon/marathon-lb/templates.tgz

Next, we'll augment the marathon-lb config by saving this in a file called options.json:

    {
      "marathon-lb": {
        "template-url": "https://downloads.mesosphere.io/marathon/marathon-lb/templates.tgz"
      }
    }

Launch the new marathon-lb:

$ dcos package install --options=options.json marathon-lb

Et voilà! Our customized marathon-lb HAProxy instance should be running with the new template. A full list of the templates available can be found here.

What's next? How about specifying per-app templates? Here's an example using our faithful nginx:

    {
      "id": "nginx-external",
      "container": {
        "type": "DOCKER",
        "docker": {
          "image": "nginx:1.7.7",
          "network": "BRIDGE",
          "portMappings": [
            { "hostPort": 0, "containerPort": 80, "servicePort": 10000 }
          ],
          "forcePullImage": true
        }
      },
      "instances": 1,
      "cpus": 0.1,
      "mem": 65,
      "healthChecks": [{
        "protocol": "HTTP",
        "path": "/",
        "portIndex": 0,
        "timeoutSeconds": 10,
        "gracePeriodSeconds": 10,
        "intervalSeconds": 2,
        "maxConsecutiveFailures": 10
      }],
      "labels": {
        "HAPROXY_GROUP": "external",
        "HAPROXY_0_BACKEND_HTTP_OPTIONS": " option forwardfor\n no option http-keep-alive\n http-request set-header X-Forwarded-Port %[dst_port]\n http-request add-header X-Forwarded-Proto https if { ssl_fc }\n"
      }
    }

In the example above, we've modified the default template to disable HTTP keep-alive. While this is a contrived example, there may be cases where you need to override certain defaults per-application.

Other options you may want to specify include enabling the sticky option, redirecting to HTTPS, or specifying a vhost.

    "labels": {
      "HAPROXY_0_STICKY": true,
      "HAPROXY_0_REDIRECT_TO_HTTPS": true,
      "HAPROXY_0_VHOST": "nginx.mesosphere.com"
    }

SSL Support

Marathon-lb supports SSL, and you may specify multiple SSL certs per frontend. Additional SSL certs can be included by passing a list of paths with the extra --ssl-certs command line flag. You can inject your own SSL cert into the marathon-lb config by specifying the HAPROXY_SSL_CERT environment variable.

If you do not specify an SSL cert, marathon-lb will generate a self-signed cert at startup. If you're using multiple SSL certs, you can select the SSL cert per app service port by specifying the HAPROXY_{n}_SSL_CERT parameter, which corresponds to the file path for the SSL certs specified. For example, you might have:

    "labels": {
      "HAPROXY_0_VHOST": "nginx.mesosphere.com",
      "HAPROXY_0_SSL_CERT": "/etc/ssl/certs/nginx.mesosphere.com"
    }

The SSL certs must be pre-loaded into the container for marathon-lb to load them (you can do this by building your own image of marathon-lb, rather than using the Mesosphere provided image).

Using HAProxy metrics

HAProxy's statistics report can be used to monitor health, performance, and even make scheduling decisions. HAProxy's data consists of counters and 1-second rates for various metrics.
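HAProxy can also serve the same report as CSV (append ;csv to the stats URI), which is easier for a script to consume. The sketch below parses such a report and pulls out the per-frontend session rate. The sample rows are invented and trimmed to a handful of columns; a real report carries many more. pxname, svname and rate are HAProxy's own column names, while frontend_session_rates is a hypothetical helper.

```python
import csv
import io


def frontend_session_rates(stats_csv):
    """Map each proxy name to the session rate of its FRONTEND row."""
    # HAProxy prefixes the header line with "# "; drop it so DictReader
    # sees clean column names.
    reader = csv.DictReader(io.StringIO(stats_csv.lstrip("# ")))
    rates = {}
    for row in reader:
        if row["svname"] == "FRONTEND":
            rates[row["pxname"]] = int(row["rate"] or 0)
    return rates


# Invented sample, trimmed to four columns for readability.
SAMPLE = (
    "# pxname,svname,scur,rate,\n"
    "stats,FRONTEND,1,1,\n"
    "nginx_10000,FRONTEND,12,54,\n"
    "nginx_10000,BACKEND,12,54,\n"
)

if __name__ == "__main__":
    print(frontend_session_rates(SAMPLE))
```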

For this post, we'll use HAProxy's data for a fun application: demonstrating an implementation of Marathon app autoscaling.

The principle is simple: for a given app, we can measure its performance in terms of requests per second for a given set of resources. If the app is stateless and scales horizontally, we can then scale the number of app instances proportionally to the number of requests per second averaged over N intervals. The autoscale script polls the HAProxy stats endpoint and automatically scales app instances based on the incoming requests.

The script doesn't do anything clever: it takes the current RPS (requests per second) and divides it by the target RPS per app instance. The ceiling of that quotient is the number of app instances required.
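The arithmetic can be sketched in a few lines of Python. This is not the marathon-lb-autoscale source, just an illustration of the calculation described above. The RpsWindow class, the function name, and the min_instances floor are assumptions added for the example.

```python
import math
from collections import deque


class RpsWindow:
    """Keeps the most recent `samples` RPS readings and averages them."""

    def __init__(self, samples):
        self._readings = deque(maxlen=samples)

    def add(self, rps):
        self._readings.append(rps)

    def average(self):
        if not self._readings:
            return 0.0
        return sum(self._readings) / len(self._readings)


def required_instances(current_rps, target_rps, min_instances=1):
    """Ceiling of current RPS over target RPS per instance.

    min_instances is an assumed floor so the app never scales to zero.
    """
    if target_rps <= 0:
        raise ValueError("target_rps must be positive")
    return max(min_instances, math.ceil(current_rps / target_rps))


if __name__ == "__main__":
    window = RpsWindow(samples=3)
    for reading in (180, 250, 320):
        window.add(reading)
    # The average is 250 RPS; at 100 RPS per instance that needs 3 instances.
    print(required_instances(window.average(), target_rps=100))
```

Averaging over a window before dividing keeps a single noisy reading from triggering a scale-up or scale-down.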

To demonstrate autoscaling, we're going to use 3 separate Marathon apps:

- marathon-lb-autoscale - the script which monitors HAProxy and scales our app via the Marathon REST API.

- nginx - our demo app

- siege - a tool for generating HTTP requests

Let's begin by running marathon-lb-autoscale. The JSON app definition can be found here. Save the file, and launch it on Marathon:

$ wget https://gist.githubusercontent.com/brndnmtthws/2ca7e10b985b2ce9f8ee/raw/66cbcbe171afc95f8ef49b70034f2842bfdb0aca/marathon-lb-autoscale.json

$ dcos marathon app add marathon-lb-autoscale.json

Let's examine the definition a bit:

    "args": [
      "--marathon", "http://leader.mesos:8080",
      "--haproxy", "http://marathon-lb.marathon.mesos:9090",
      "--target-rps", "100",
      "--apps", "nginx_10000"
    ],

Note: If you're not already running an external marathon-lb instance, launch it with dcos package install marathon-lb.

Above, we're passing two important arguments to the tool: --target-rps sets the target RPS per app instance, and --apps is a comma-separated list of the Marathon apps and service ports to monitor, with each app name and service port joined by _ (nginx_10000 is the nginx app on service port 10000). Each app can expose multiple service ports to the LB if configured to do so, and marathon-lb-autoscale will scale the app to the highest instance count that any of those ports requires.
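To make the --apps format concrete, here is a small Python sketch of how such entries could be split and combined. It assumes that satisfying every service port means taking the largest per-port instance count. Both function names and the extra ports in the usage example are hypothetical, and this is not the tool's actual code.

```python
def parse_app_spec(spec):
    """Split an entry such as 'nginx_10000' into ('nginx', 10000)."""
    # Split on the last underscore so app names may contain underscores.
    app, _, port = spec.rpartition("_")
    if not app or not port.isdigit():
        raise ValueError(f"expected <app>_<servicePort>, got {spec!r}")
    return app, int(port)


def instances_per_app(required_by_spec):
    """Collapse per-port requirements into one instance count per app.

    Assumption: the app must satisfy its busiest port, so take the max.
    """
    result = {}
    for spec, needed in required_by_spec.items():
        app, _port = parse_app_spec(spec)
        result[app] = max(result.get(app, 0), needed)
    return result


if __name__ == "__main__":
    # Ports 10001 and 10002 are made up for the example.
    print(instances_per_app({"nginx_10000": 2, "nginx_10001": 5, "api_10002": 1}))
```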

Next, we'll launch our nginx test instance. The JSON app definition can be found here. Save the file, and launch with:

$ wget https://gist.githubusercontent.com/brndnmtthws/84d0ab8ac057aaacba05/raw/d028fa9477d30b723b140065748e43f8fd974a84/nginx.json

$ dcos marathon app add nginx.json

And finally, let's launch siege, a tool for generating HTTP request traffic. The JSON app definition can be found here. Save the file, and launch with:

$ wget https://gist.githubusercontent.com/brndnmtthws/fe3fb0c13c19a96c362e/raw/32280a39e1a8a6fe2286d746b0c07329fedcb722/siege.json

$ dcos marathon app add siege.json

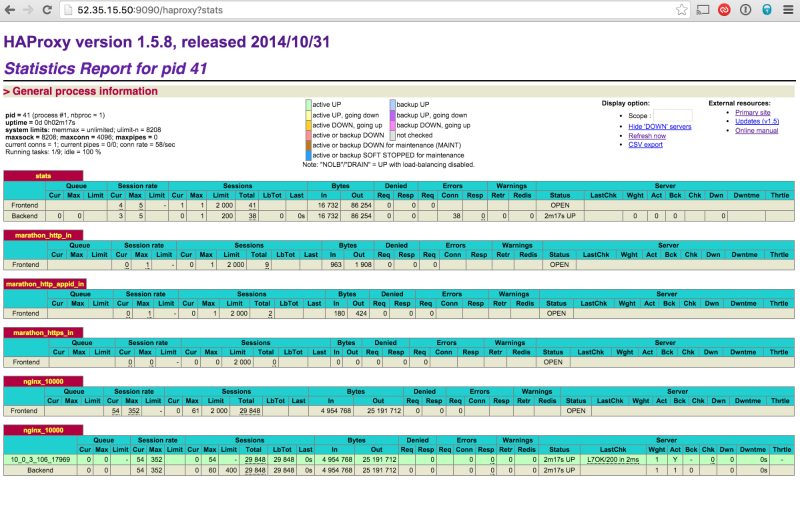

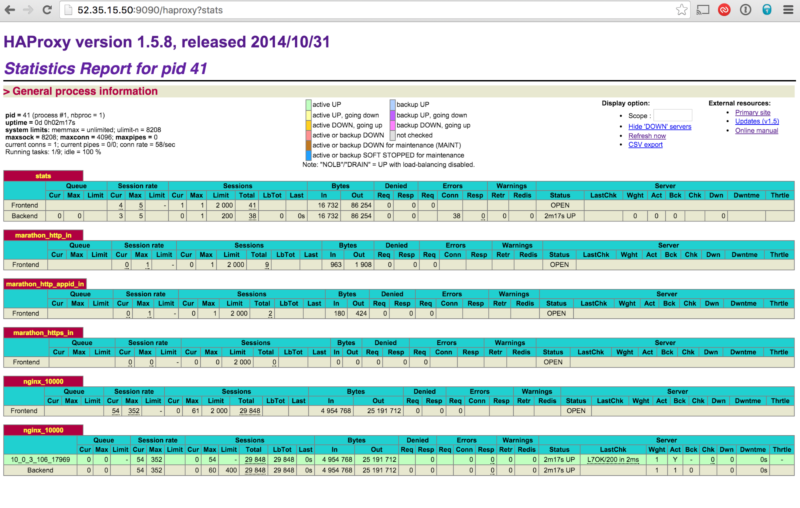

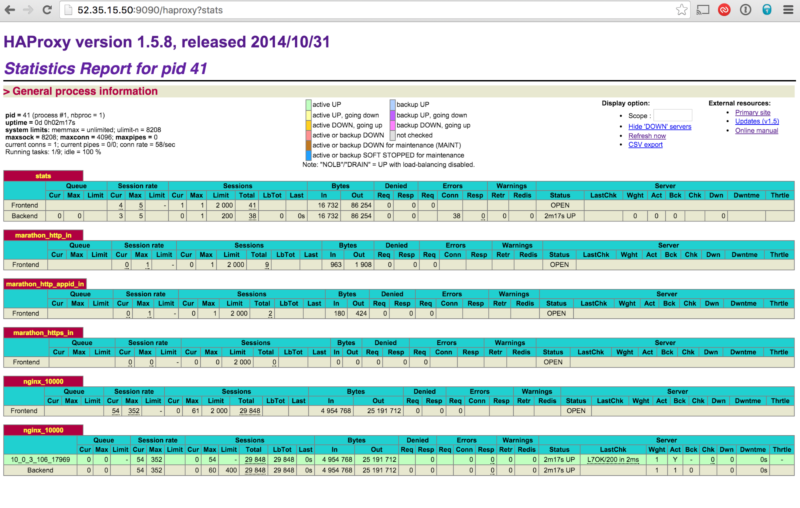

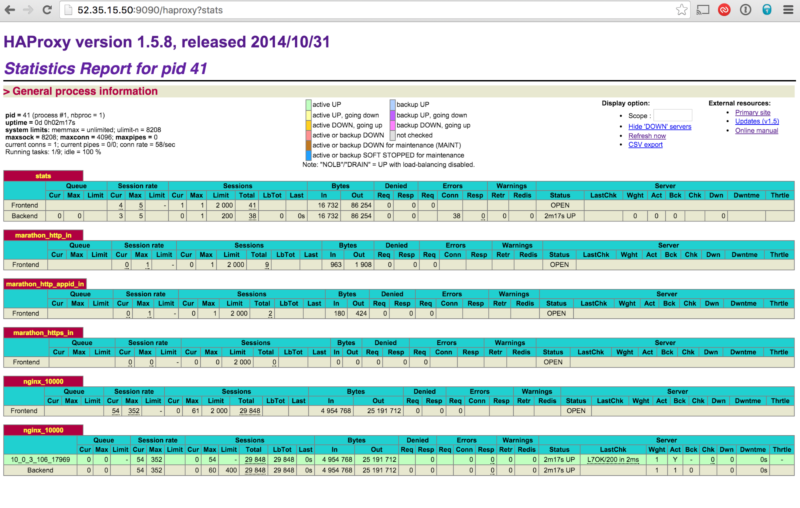

Now, if you check the HAProxy status page, you should see requests hitting the nginx instance:

Under the "Session rate" section, you can see we're currently getting about 54 requests per second on the nginx frontend.

Next, scale the siege app so that we generate a bunch of HTTP requests:

$ dcos marathon app update /siege instances=15

Now wait a few minutes—go grab a coffee, tea, muffin, steak or whatever your vice may be. You'll notice after a couple of minutes that the nginx app has been automatically scaled up to serve the increased traffic.

Next, try experimenting with the parameters for marathon-lb-autoscale (which are documented here). Try changing the interval, number of samples, and other values until you achieve the desired effect. The default values are fairly conservative, which may or may not meet your expectations. It's suggested that you include a 50 percent safety factor in the target RPS. For example, if you measure your application as being able to meet SLAs at 1500 RPS with 1 CPU and 1GiB of memory, you may want to set the target RPS to 1000.
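The safety-factor arithmetic from the example above, as a tiny Python sketch. It reads "50 percent safety factor" as meaning the measured capacity should sit 50 percent above the target, which matches the 1500 to 1000 figures. The function name is hypothetical.

```python
def target_rps_with_margin(measured_rps, safety_factor=0.5):
    """Derate a measured per-instance capacity by a safety factor.

    Assumption: measured capacity = target * (1 + safety_factor).
    """
    if safety_factor < 0:
        raise ValueError("safety_factor must not be negative")
    return measured_rps / (1 + safety_factor)


if __name__ == "__main__":
    # 1500 RPS measured with a 50 percent safety factor gives a 1000 RPS target.
    print(target_rps_with_margin(1500))
```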

That's all! Congratulations on reaching the end.